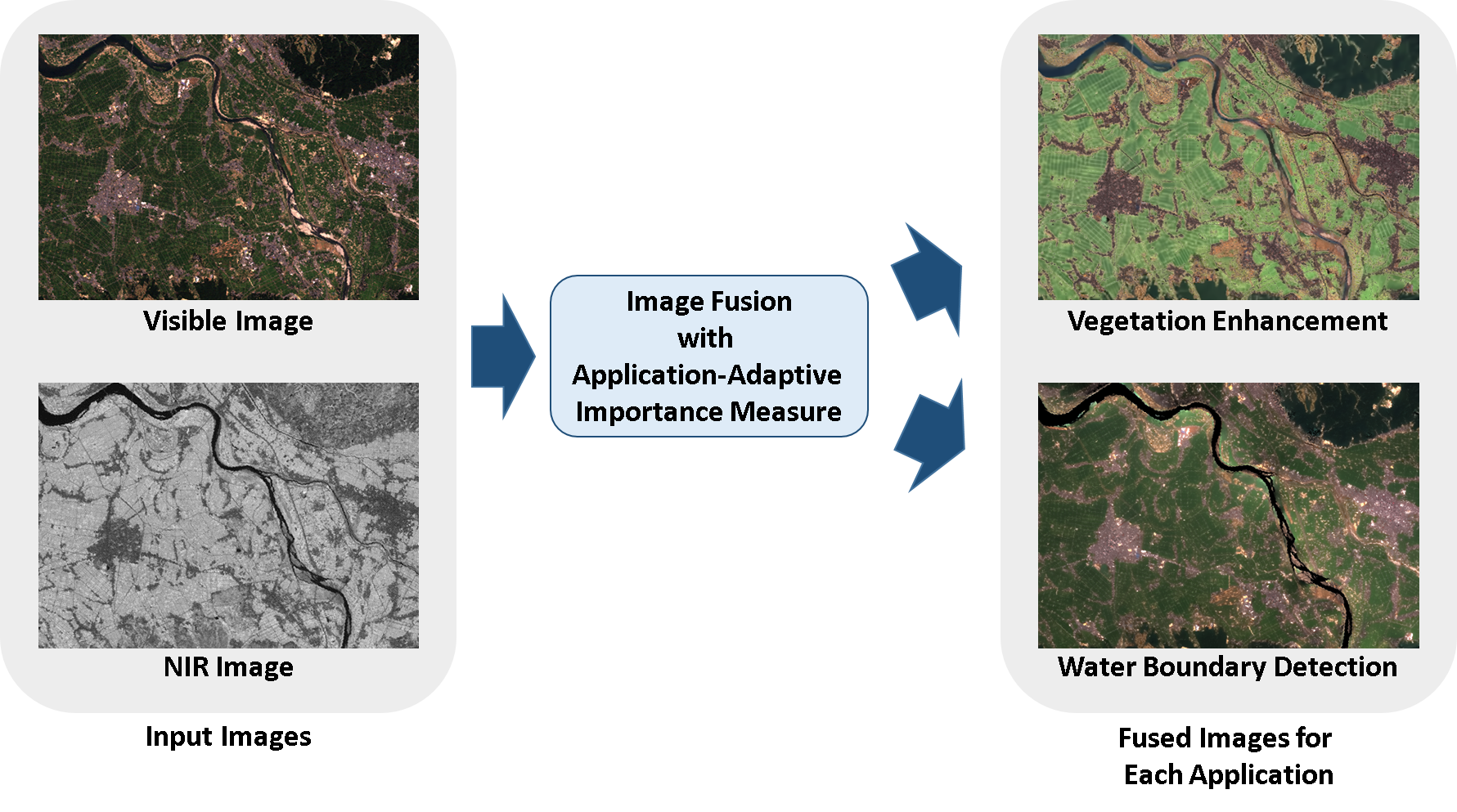

Multi-Modal/Spectral Image Fusion

Unified Image Fusion Framework

with Application-Adaptive Importance Measure

Takashi Shibata, Masayuki Tanaka, and Masatoshi Okutomi


We present a novel unified image fusion framework based on an application-adaptive importance measure. In the proposed method, the important area is selected pixel by pixel using an importance measure that is designed for each image type in each application. The fused intensity is then generated by Poisson image editing. The main contribution is a generalized image fusion framework that can handle various types of images across many applications. Experimental results show that the proposed method is effective for various applications, including depth-perceptible image enhancement, temperature-preserving image fusion, optical flow fusion, and haze removal.
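The two stages described above (pixel-wise selection by an importance measure, then Poisson-based intensity reconstruction) can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the importance measure here is plain gradient magnitude, chosen only as a stand-in for the application-specific measures, and the Poisson equation is solved with simple Jacobi iterations. The function names `gradient_magnitude`, `select_by_importance`, and `fuse_poisson` are hypothetical.

```python
import numpy as np

def gradient_magnitude(img):
    """Assumed importance measure: local gradient magnitude."""
    gy, gx = np.gradient(img.astype(float))
    return np.hypot(gx, gy)

def select_by_importance(images, importance_fn=gradient_magnitude):
    """Return, for each pixel, the index of the most important input image."""
    importance = np.stack([importance_fn(im) for im in images])  # (K, H, W)
    return np.argmax(importance, axis=0)                         # (H, W)

def fuse_poisson(images, labels, n_iter=500):
    """Fuse by taking gradients from the selected image at each pixel and
    solving the Poisson equation (Jacobi iterations, fixed boundary)."""
    stack = np.stack(images).astype(float)                       # (K, H, W)
    k, h, w = stack.shape
    # Forward-difference gradients of every input image.
    gx = np.zeros((k, h, w))
    gy = np.zeros((k, h, w))
    gx[:, :, :-1] = stack[:, :, 1:] - stack[:, :, :-1]
    gy[:, :-1, :] = stack[:, 1:, :] - stack[:, :-1, :]
    # Pick the gradient of the selected image at each pixel.
    sel_gx = np.take_along_axis(gx, labels[None], axis=0)[0]
    sel_gy = np.take_along_axis(gy, labels[None], axis=0)[0]
    # Divergence of the selected gradient field (backward differences).
    div = sel_gx + sel_gy
    div[:, 1:] -= sel_gx[:, :-1]
    div[1:, :] -= sel_gy[:-1, :]
    # Initialise with the selected intensities; border pixels stay fixed.
    out = np.take_along_axis(stack, labels[None], axis=0)[0]
    for _ in range(n_iter):
        neighbours = (out[:-2, 1:-1] + out[2:, 1:-1]
                      + out[1:-1, :-2] + out[1:-1, 2:])
        out[1:-1, 1:-1] = (neighbours - div[1:-1, 1:-1]) / 4.0
    return out
```

Swapping `gradient_magnitude` for another per-pixel score is the only change needed to try a different importance measure in this sketch; a practical system would likely use a faster Poisson solver than Jacobi iteration.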

Misalignment-Robust Joint Filter

for Cross-Modal Image Pairs

Takashi Shibata, Masayuki Tanaka, and Masatoshi Okutomi

Related Supplemental Movie (ICCV2017)

Although several powerful joint filters for cross-modal image pairs have been proposed, existing joint filters generate severe artifacts when the target and guidance images are misaligned. Our goal is to generate an artifact-free output image even from misaligned target and guidance images. We propose a novel misalignment-robust joint filter based on weight-volume-based image composition and a joint-filter cost volume. The proposed method first generates a set of translated guidances. Next, the joint-filter cost volume and a set of filtered images are computed from the target image and the set of translated guidances. Then, a weight volume is obtained from the joint-filter cost volume while considering spatial smoothness and label sparseness. The final output image is composed by fusing the set of filtered images using the weight volume. The key is to generate the final output image directly from the set of filtered images by weighted averaging, using the weight volume obtained from the joint-filter cost volume. The proposed framework is widely applicable and can incorporate any kind of joint filter. Experimental results show that the proposed method is effective for various applications, including image denoising, image up-sampling, haze removal, and depth map interpolation.
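The pipeline above (translated guidances, filtered images, cost volume, weight volume, weighted averaging) can be sketched roughly as follows. This is an illustrative simplification under stated assumptions, not the authors' method: a basic guided filter stands in for "any kind of joint filter", the cost is an assumed local squared residual between filtered image and target, and the weight volume is a per-pixel softmax over box-smoothed costs rather than the smoothness- and sparseness-regularized weights described in the abstract. All function and parameter names (`box_mean`, `guided_filter`, `shift`, `misalignment_robust_filter`, `beta`) are hypothetical.

```python
import numpy as np

def box_mean(img, r):
    """Mean over a (2r+1)x(2r+1) window with edge padding."""
    h, w = img.shape
    p = np.pad(img, r, mode="edge")
    acc = np.zeros((h, w))
    for dy in range(2 * r + 1):
        for dx in range(2 * r + 1):
            acc += p[dy:dy + h, dx:dx + w]
    return acc / (2 * r + 1) ** 2

def guided_filter(target, guide, r=2, eps=1e-3):
    """Basic guided filter, used here as a stand-in joint filter."""
    mg, mt = box_mean(guide, r), box_mean(target, r)
    var_g = box_mean(guide * guide, r) - mg * mg
    cov = box_mean(guide * target, r) - mg * mt
    a = cov / (var_g + eps)
    b = mt - a * mg
    return box_mean(a, r) * guide + box_mean(b, r)

def shift(img, dy, dx):
    """Translate an image by (dy, dx) pixels with edge replication."""
    h, w = img.shape
    m = max(abs(dy), abs(dx))
    p = np.pad(img, m, mode="edge")
    return p[m - dy:m - dy + h, m - dx:m - dx + w]

def misalignment_robust_filter(target, guide, max_shift=2, r=2, beta=50.0):
    """Fuse joint-filter outputs over a set of translated guidances."""
    shifts = [(dy, dx)
              for dy in range(-max_shift, max_shift + 1)
              for dx in range(-max_shift, max_shift + 1)]
    filtered, cost = [], []
    for dy, dx in shifts:
        f = guided_filter(target, shift(guide, dy, dx), r)
        filtered.append(f)
        # Assumed cost: locally averaged squared residual (box smoothing
        # is a crude substitute for the spatial smoothness term).
        cost.append(box_mean((f - target) ** 2, r))
    filtered, cost = np.stack(filtered), np.stack(cost)
    # Weight volume: per-pixel softmax over shifts, low cost -> high weight.
    weights = np.exp(-beta * (cost - cost.min(axis=0, keepdims=True)))
    weights /= weights.sum(axis=0, keepdims=True)
    # Final output: weighted average of the filtered images.
    return (weights * filtered).sum(axis=0), weights
```

Because the output is a per-pixel convex combination of the filtered images, replacing `guided_filter` with another joint filter leaves the rest of the sketch unchanged.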

Takashi Shibata, Masayuki Tanaka and Masatoshi Okutomi

IEEE Transactions on Computational Imaging, Vol.5, Issue:1, pp.82-96, March, 2019

Takashi Shibata, Masayuki Tanaka and Masatoshi Okutomi

Journal of Electronic Imaging, Vol.25, No.1, pp.013016-1-17, January, 2016

Takashi Shibata, Masayuki Tanaka and Masatoshi Okutomi

Proceedings of IS&T/SPIE Electronic Imaging (EI2016), February, 2016

Takashi Shibata, Masayuki Tanaka and Masatoshi Okutomi

Proceedings of IS&T/SPIE Electronic Imaging (EI2015), Vol.9404, pp.94040G-1-6, February, 2015

Takashi Shibata, Masayuki Tanaka and Masatoshi Okutomi

Proceedings of IEEE International Conference on Image Processing (ICIP2015), pp.1-5, September, 2015

Takashi Shibata, Masayuki Tanaka and Masatoshi Okutomi

Proceedings of IEEE International Conference on Computer Vision (ICCV2017), pp.3315-3324, October, 2017
