Segmentation-Guided Neural Radiance Fields for Novel Street View Synthesis

Yizhou Li*, Mizuki Morikawa*, Yusuke Monno*, Yuuichi Tanaka**, Seiichi Kataoka**, Teruaki Kosiba** and Masatoshi Okutomi*

* Institute of Science Tokyo

** Micware CO., LTD.

Abstract

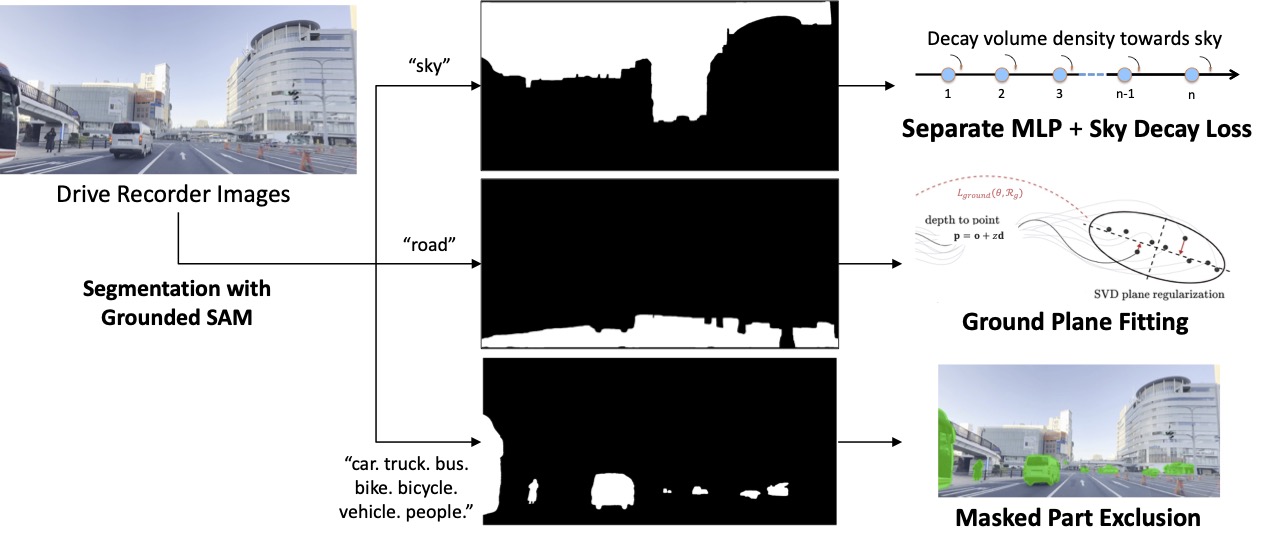

Recent advances in Neural Radiance Fields (NeRF) have shown great potential in 3D reconstruction and novel view synthesis, particularly for indoor and small-scale scenes. However, extending NeRF to large-scale outdoor environments presents challenges such as transient objects, sparse cameras and textures, and varying lighting conditions. In this paper, we propose a segmentation-guided enhancement to NeRF for outdoor street scenes, focusing on complex urban environments. Our approach extends ZipNeRF and utilizes Grounded SAM for segmentation mask generation, enabling effective handling of transient objects, modeling of the sky, and regularization of the ground. We also introduce appearance embeddings to adapt to inconsistent lighting across view sequences. Experimental results demonstrate that our method outperforms the baseline ZipNeRF, improving novel view synthesis quality with fewer artifacts and sharper details.
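As a rough illustration of how segmentation masks can keep transient objects out of NeRF training, the sketch below computes a photometric loss only over pixels that a mask does not flag as transient. This is a minimal sketch under stated assumptions, not the implementation used in the paper: the function name `masked_photometric_loss`, the flat pixel-list layout, and the use of a plain mean squared error are all hypothetical choices made for illustration.

```python
from typing import Sequence

Pixel = Sequence[float]  # RGB values, assumed to lie in [0, 1]


def masked_photometric_loss(
    rendered: Sequence[Pixel],
    target: Sequence[Pixel],
    transient_mask: Sequence[bool],
) -> float:
    """Mean squared RGB error over pixels NOT flagged as transient.

    transient_mask[i] is True when pixel i is assumed to belong to a
    transient object (e.g. a car or pedestrian) found by a segmentation model.
    """
    if not (len(rendered) == len(target) == len(transient_mask)):
        raise ValueError("all inputs must have the same number of pixels")

    total = 0.0
    count = 0
    for pred, gt, is_transient in zip(rendered, target, transient_mask):
        if is_transient:
            continue  # masked pixels are excluded from supervision
        total += sum((p - g) ** 2 for p, g in zip(pred, gt))
        count += len(pred)
    # If every pixel is masked there is nothing to supervise.
    return total / count if count else 0.0
```

In a real pipeline the same idea would typically be applied per ray batch inside an autodiff framework, with the mask produced offline by a segmentation model such as Grounded SAM.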

Video Results

Publications

Segmentation-Guided Neural Radiance Fields for Novel Street View Synthesis

Yizhou Li, Yusuke Monno, Masatoshi Okutomi, Yuuichi Tanaka, Seiichi Kataoka, and Teruaki Kosiba

International Conference on Computer Vision Theory and Applications 2025 (VISAPP 2025)

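Free-Viewpoint Image Generation from Car-Mounted Camera Video Using Segmentation Masks

Mizuki Morikawa, Yizhou Li, Yusuke Monno, Yuuichi Tanaka, Seiichi Kataoka, Teruaki Kosiba, and Masatoshi Okutomi

The 31st Symposium on Sensing via Image Information (SSII 2025)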

Exhibition

Novel View Synthesis Using Car-Mounted Camera

Micware Co. Ltd.

Mobile World Congress 2025 (MWC2025)