ProCamCalib – Samuel Audet's Research

A User-Friendly Method to Calibrate Cameras and Projector-Camera Systems

Figure 1. Snapshot of our demo video showing the test user calibrating a casually installed projector-camera system.

Projector-camera systems promise to deliver new kinds of computer applications, such as spatial augmented reality [2], for a wide range of potential users. For this reason, we want systems that anyone can easily use. In our opinion, this should include geometric calibration, since knowledge of the internal and external geometric parameters is often important for proper operation. However, current calibration methods are not user-friendly, and the manipulations they require can also become a burden to researchers.

Current methods either operate in two phases, use structured light, or rely on color channels, and we found none of them satisfactory. Operating in two phases, by first calibrating the cameras one way and then the projectors another way, doubles the work. With structured light, users cannot hold the calibration target in their hands and need to physically secure it. Finally, methods that rely on color channels cannot be used without color cameras, projectors, and printers, and the differing color properties of these devices can also cause problems and complicate execution.

We propose a user-friendly method [1] based on the fiducial markers typically used in augmented reality applications. Fiala and Shu [3] proposed such a method for camera-only systems. We extend it to projector-camera systems, where half of the markers are physically printed and the other half are displayed by the projector. Each marker carries its own information, so it can easily be identified on its own. With only one pair of correctly detected markers, we can derive a homography and prewarp all projector markers so that they do not interfere with the printed ones. In addition, the markers do not need color, and unlike with structured light, users can hold the calibration board in their hands. The user simply has to wave the calibration board, and the machine automatically captures and saves about ten good images, from which it computes the calibration parameters of both the camera and the projector using Zhang's method [4]. Further, the method supports any number of cameras and projectors.
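The homography and prewarping step can be illustrated with a small Java sketch. This is not ProCamCalib's code: it assumes that the four corners of a printed marker (known board coordinates to detected camera pixels) give a board-to-camera homography, that the four corners of a projected marker (detected camera pixels to known projector pixels) give a camera-to-projector homography, and that composing the two maps board positions into projector pixels. The class and method names (`MarkerHomography`, `fromFourPoints`, `multiply`, `apply`) are hypothetical, and the exact four-point solve is chosen for brevity.

```java
// Hypothetical sketch, not ProCamCalib's API: plane-to-plane homographies
// from the four corners of one marker, stored row-major as 3x3 arrays.
final class MarkerHomography {

    // Solves the n x n system a * x = b by Gaussian elimination with
    // partial pivoting. Both arguments are modified in place.
    static double[] solve(double[][] a, double[] b) {
        int n = b.length;
        for (int col = 0; col < n; col++) {
            int pivot = col;
            for (int r = col + 1; r < n; r++) {
                if (Math.abs(a[r][col]) > Math.abs(a[pivot][col])) {
                    pivot = r;
                }
            }
            double[] rowTmp = a[col]; a[col] = a[pivot]; a[pivot] = rowTmp;
            double bTmp = b[col]; b[col] = b[pivot]; b[pivot] = bTmp;
            if (Math.abs(a[col][col]) < 1e-12) {
                throw new IllegalArgumentException("degenerate correspondences");
            }
            for (int r = col + 1; r < n; r++) {
                double f = a[r][col] / a[col][col];
                for (int c = col; c < n; c++) {
                    a[r][c] -= f * a[col][c];
                }
                b[r] -= f * b[col];
            }
        }
        double[] x = new double[n];
        for (int r = n - 1; r >= 0; r--) {
            double s = b[r];
            for (int c = r + 1; c < n; c++) {
                s -= a[r][c] * x[c];
            }
            x[r] = s / a[r][r];
        }
        return x;
    }

    // Homography H (with h33 fixed to 1) mapping src[i] = {x, y} to
    // dst[i] = {u, v} for exactly four correspondences, e.g. marker corners.
    // Each pair gives two linear equations from u = (h0 x + h1 y + h2) / w
    // and v = (h3 x + h4 y + h5) / w, where w = h6 x + h7 y + 1.
    static double[] fromFourPoints(double[][] src, double[][] dst) {
        double[][] a = new double[8][];
        double[] b = new double[8];
        for (int i = 0; i < 4; i++) {
            double x = src[i][0], y = src[i][1], u = dst[i][0], v = dst[i][1];
            a[2 * i] = new double[] {x, y, 1, 0, 0, 0, -u * x, -u * y};
            a[2 * i + 1] = new double[] {0, 0, 0, x, y, 1, -v * x, -v * y};
            b[2 * i] = u;
            b[2 * i + 1] = v;
        }
        double[] h = solve(a, b);
        return new double[] {h[0], h[1], h[2], h[3], h[4], h[5], h[6], h[7], 1};
    }

    // Returns the product m * n, i.e. the mapping "apply n, then m".
    static double[] multiply(double[] m, double[] n) {
        double[] r = new double[9];
        for (int i = 0; i < 3; i++) {
            for (int j = 0; j < 3; j++) {
                for (int k = 0; k < 3; k++) {
                    r[3 * i + j] += m[3 * i + k] * n[3 * k + j];
                }
            }
        }
        return r;
    }

    // Maps point p = {x, y} through h, including the perspective division.
    static double[] apply(double[] h, double[] p) {
        double w = h[6] * p[0] + h[7] * p[1] + h[8];
        return new double[] {
            (h[0] * p[0] + h[1] * p[1] + h[2]) / w,
            (h[3] * p[0] + h[4] * p[1] + h[5]) / w};
    }
}
```

Under these assumptions, `multiply(hCamToProj, hBoardToCam)` maps board coordinates to projector pixels, so projected markers could be drawn in board regions left free by the printed ones. With noisy detections, a least-squares fit over more corners with coordinate normalization (as offered by OpenCV's `findHomography`) would be more robust than this exact four-point solve.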

We implemented the method in software on commodity hardware and found that a user could fully calibrate a camera and a projector in about 30 seconds. Figure 1 shows the user in action on the casually installed system, using a flat foam board. The full sequence can be downloaded below. In this particular session, we achieved a reprojection error of 0.33 pixels for the camera and 0.20 pixels for the projector, and we can readily reproduce such results. Using a flatter wooden board, we obtain better results of about 0.15 pixels for both the camera and the projector, as shown in Figure 3.
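The reprojection errors quoted above measure how far the calibrated model's projections of the board points fall from their detected image positions. The Java sketch below computes the root-mean-square version for a single view under an ideal pinhole model with intrinsic matrix K and pose (R, t), as in Zhang's method [4], but without lens distortion. The page does not say whether ProCamCalib reports the RMS, the mean, or another statistic, and the class and method names are hypothetical.

```java
// Hypothetical helper, not ProCamCalib's API: RMS reprojection error of an
// ideal pinhole model (no lens distortion) for one view of the board.
final class ReprojectionError {

    // Projects a 3D board point p = {X, Y, Z} to pixels: x ~ K (R p + t).
    // Assumes k is upper triangular with last row {0, 0, 1}.
    static double[] project(double[][] k, double[][] r, double[] t, double[] p) {
        double[] c = new double[3];
        for (int i = 0; i < 3; i++) {
            c[i] = r[i][0] * p[0] + r[i][1] * p[1] + r[i][2] * p[2] + t[i];
        }
        double xn = c[0] / c[2]; // normalized image coordinates
        double yn = c[1] / c[2];
        return new double[] {
            k[0][0] * xn + k[0][1] * yn + k[0][2], // fx * xn + skew * yn + cx
            k[1][1] * yn + k[1][2]                 // fy * yn + cy
        };
    }

    // Square root of the mean squared pixel distance between the detected
    // points and the projections of the corresponding board points.
    static double rms(double[][] k, double[][] r, double[] t,
                      double[][] boardPoints, double[][] detected) {
        double sumSq = 0;
        for (int i = 0; i < boardPoints.length; i++) {
            double[] q = project(k, r, t, boardPoints[i]);
            double dx = q[0] - detected[i][0];
            double dy = q[1] - detected[i][1];
            sumSq += dx * dx + dy * dy;
        }
        return Math.sqrt(sumSq / boardPoints.length);
    }
}
```

Accumulating the squared distances over all captured views before taking the square root gives an overall figure. For the projector, the same computation would apply to its own intrinsics and pose, assuming the usual model of a projector as an inverse camera.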

Because our method requires only a light board, a printed pattern, and less than one minute, we conclude that it is the most user-friendly of the available methods. The subpixel accuracy it achieves also compares favorably with all previous methods. Furthermore, since the method relies solely on the existing theory of camera calibration [4], its reprojection accuracy should translate into comparable physical 3D accuracy, although this remains to be proven. Some minor technical issues also still need polishing. In any case, we believe that the method could already be useful to other researchers, so we are making the source code available for download below. Newer versions also support color calibration.
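For reference, the core of the camera calibration theory cited above [4] can be summarized as follows. For a planar board at $Z = 0$, each view yields a homography $H$ from board coordinates to image pixels, and the orthonormality of the first two rotation columns $\mathbf{r}_1, \mathbf{r}_2$ constrains the intrinsic matrix $K$. Applying the same equations to the projector assumes it can be modeled as an inverse camera; this is the standard treatment, not necessarily ProCamCalib's exact implementation.

$$
s\begin{bmatrix}u\\ v\\ 1\end{bmatrix} = K\begin{bmatrix}\mathbf{r}_1 & \mathbf{r}_2 & \mathbf{t}\end{bmatrix}\begin{bmatrix}X\\ Y\\ 1\end{bmatrix},
\qquad
H = \begin{bmatrix}\mathbf{h}_1 & \mathbf{h}_2 & \mathbf{h}_3\end{bmatrix} = \lambda K\begin{bmatrix}\mathbf{r}_1 & \mathbf{r}_2 & \mathbf{t}\end{bmatrix}
$$

$$
\mathbf{h}_1^\top K^{-\top}K^{-1}\mathbf{h}_2 = 0,
\qquad
\mathbf{h}_1^\top K^{-\top}K^{-1}\mathbf{h}_1 = \mathbf{h}_2^\top K^{-\top}K^{-1}\mathbf{h}_2
$$

Each view therefore gives two linear constraints on the symmetric matrix $B = K^{-\top}K^{-1}$, so three or more views in general determine $K$. The extrinsic parameters then follow from each $H$, and all parameters, including lens distortion, are refined by nonlinear minimization of the reprojection error.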

Our demo video below features the test user during calibration.

References

| [1] | Samuel Audet and Masatoshi Okutomi. A User-Friendly Method to Geometrically Calibrate Projector-Camera Systems. The 22nd IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2009) - Workshops (Procams 2009), pages 47–54. IEEE Computer Society, June 2009. |

| [2] | Oliver Bimber and Ramesh Raskar. Spatial Augmented Reality: Merging Real and Virtual Worlds. A K Peters, Ltd., Wellesley, MA, USA, 2005. |

| [3] | Mark Fiala and Chang Shu. Self-identifying patterns for plane-based camera calibration. Machine Vision and Applications, 19(4):209–216, July 2008. |

| [4] | Zhengyou Zhang. A Flexible New Technique for Camera Calibration. IEEE Transactions on Pattern Analysis and Machine Intelligence, 22(11):1330–1334, 2000. |

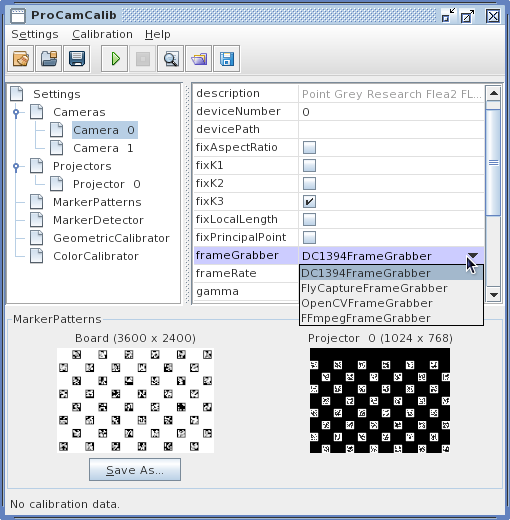

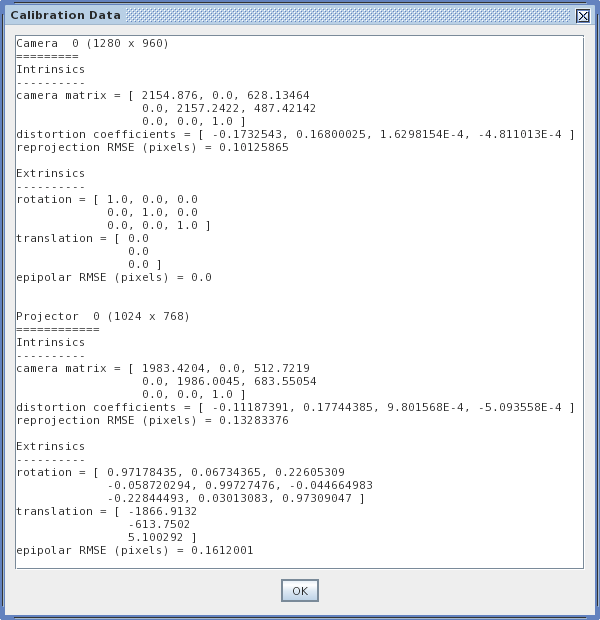

Screenshots

Figures 2 and 3 are screenshots of ProCamCalib running under Java's Ocean look and feel: the first shows the main window, and the second shows sample geometric calibration results.

Figure 2. Screenshot of the main window of ProCamCalib, where all the settings can be adjusted. They are currently configured for calibrating two cameras and one projector.

Figure 3. Screenshot of the Calibration Data window of ProCamCalib displaying sample geometric calibration results obtained for one camera and one projector using a flat wooden calibration board.

Download

Binary

- ProCamCalib: procamcalib-bin-20120329.zip (8.4 MB) for Linux, Mac OS X, and Windows (x86 and x86-64)

- Requires the freely downloadable Java SE 6 or 7 (OpenJDK, Sun JDK, IBM JDK, Java SE for Mac OS X, etc.) and OpenCV 2.3.1.

Source code

- JavaCPP: javacpp-src-20120329.zip (84.5 kB)

- JavaCV: javacv-src-20120329.zip (455 kB)

- ProCamCalib: procamcalib-src-20120329.zip (56.1 kB)

- ARToolKitPlus 2.1.1t: ARToolKitPlus_2.1.1t.zip (2.8 MB)

About JavaCPP

More information on the project's home page.

About JavaCV

More information on the project's home page.

About ARToolKitPlus

More information on the original author's Web site.

Author – Samuel Audet <saudet at ok.ctrl.titech.ac.jp>

Last Modified – 2015-01-21