ProCamTracker – Samuel Audet's Research

Markerless Interactive Augmented Reality with a Projector and a Color Camera

Figure 1. Snapshot of our demo video showing the patterns of Figure 2, where the system has aligned the projector-displayed pattern with the printed one (alongside a chronometer, a video animation, and a red virtual ball).

Traditional applications of augmented reality superimpose generated images onto the real world through goggles, or through monitors held between the objects of interest and the user. The display usually has to follow the objects, and developers often choose cameras to perform the tracking, since computer vision methods are flexible and nonintrusive. Because the augmented objects themselves remain physically unchanged, the image processing algorithms need no modification either: existing computer vision techniques can be applied directly for tracking or other purposes. With spatial augmented reality [2], however, we instead use video projectors to display computer graphics or data directly onto surfaces, as exemplified in Figure 1. In this case, the projected light can severely alter the appearance of the objects, which calls for new methods.
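To make the last point concrete, the following Java sketch uses a deliberately simplified, assumed grayscale image formation model, observed = albedo × (projected + ambient), applied independently to each pixel. It ignores geometry, color mixing, and the camera response, and it is not the model used in [1]; the class and method names are hypothetical. It shows how projected light alone can change what a camera sees of a printed pattern, to the point of erasing it.

```java
/**
 * Illustration only: a simplified, ASSUMED grayscale image formation model for
 * spatial AR, observed = albedo * (projected + ambient), applied per pixel.
 * It ignores geometry, color mixing, and the camera response curve.
 */
public class ProjectedAppearanceSketch {

    /** Camera intensity predicted by the assumed model for each pixel. */
    static double[] observe(double[] albedo, double[] projected, double ambient) {
        double[] out = new double[albedo.length];
        for (int i = 0; i < albedo.length; i++) {
            out[i] = albedo[i] * (projected[i] + ambient);
        }
        return out;
    }

    /** Difference between the brightest and the darkest observed pixel. */
    static double contrast(double[] img) {
        double min = Double.POSITIVE_INFINITY, max = Double.NEGATIVE_INFINITY;
        for (double v : img) {
            min = Math.min(min, v);
            max = Math.max(max, v);
        }
        return max - min;
    }

    public static void main(String[] args) {
        double[] albedo = {0.2, 0.8, 0.2, 0.8}; // one row of a printed dark/light pattern
        double ambient = 0.5;                    // uniform ambient light (arbitrary units)
        double[] off = {0.0, 0.0, 0.0, 0.0};     // projector displaying black
        double[] on = {1.5, 0.0, 1.5, 0.0};      // projector lighting only the dark squares

        System.out.println("contrast, projector off: " + contrast(observe(albedo, off, ambient)));
        System.out.println("contrast, projector on:  " + contrast(observe(albedo, on, ambient)));
    }
}
```

With the projector off, the printed pattern shows a contrast of about 0.3; with this particular projected image, every pixel reads 0.4 and the pattern vanishes from the camera's point of view, so a tracker that expects the printed appearance would have nothing left to match.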

ProCamTracker is a user-friendly computer application (see the screenshot below) that turns an ordinary color camera and projector pair into a system that can track a real-world object without markers (currently limited to matte planes), while simultaneously projecting geometrically corrected video images onto its surface. It relies on the direct image alignment algorithm included in JavaCV, an open source library I developed as part of my doctoral research. The ProCamTracker software package and its source code can be obtained from the download section below. More information about the algorithm can be found in the related CVPR 2010 paper [1], which you may cite if you find the software useful.
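The algorithm in JavaCV models a full projector-camera system with a planar surface [1] and is far more elaborate than what fits here. As rough intuition for what "direct" alignment means, the Java sketch below estimates a pure 2D translation between two grayscale images by Gauss-Newton minimization of the sum of squared intensity differences (Lucas-Kanade style), working on pixel intensities rather than on markers or extracted features. The class name DirectAlignmentSketch, the synthetic pattern, and the translation-only motion model are all hypothetical simplifications, not JavaCV's API and not the method of [1].

```java
/**
 * Hypothetical sketch (not JavaCV's API, not the algorithm of [1]):
 * direct, intensity-based alignment of two grayscale images by Gauss-Newton
 * (Lucas-Kanade style), restricted to a pure 2D translation.
 */
public class DirectAlignmentSketch {

    /** Bilinear interpolation of img at real-valued (x, y), clamped to the image. */
    static double sample(double[][] img, double x, double y) {
        int h = img.length, w = img[0].length;
        x = Math.max(0, Math.min(w - 1.001, x));
        y = Math.max(0, Math.min(h - 1.001, y));
        int x0 = (int) Math.floor(x), y0 = (int) Math.floor(y);
        double ax = x - x0, ay = y - y0;
        return (1 - ax) * (1 - ay) * img[y0][x0] + ax * (1 - ay) * img[y0][x0 + 1]
             + (1 - ax) * ay * img[y0 + 1][x0] + ax * ay * img[y0 + 1][x0 + 1];
    }

    /**
     * Estimates (tx, ty) such that image(x + tx, y + ty) matches template(x, y),
     * by minimizing the sum of squared intensity differences over an inner region.
     */
    static double[] align(double[][] template, double[][] image, int maxIterations) {
        int h = template.length, w = template[0].length, margin = 8;
        double tx = 0, ty = 0;
        for (int it = 0; it < maxIterations; it++) {
            // Accumulate the 2x2 Gauss-Newton normal equations H * d = b.
            double hxx = 0, hxy = 0, hyy = 0, bx = 0, by = 0;
            for (int y = margin; y < h - margin; y++) {
                for (int x = margin; x < w - margin; x++) {
                    double u = x + tx, v = y + ty;
                    // Image gradient at the warped location (central differences).
                    double gx = (sample(image, u + 1, v) - sample(image, u - 1, v)) / 2;
                    double gy = (sample(image, u, v + 1) - sample(image, u, v - 1)) / 2;
                    double err = template[y][x] - sample(image, u, v);
                    hxx += gx * gx; hxy += gx * gy; hyy += gy * gy;
                    bx += gx * err; by += gy * err;
                }
            }
            double det = hxx * hyy - hxy * hxy;
            if (Math.abs(det) < 1e-12) break; // textureless region: no reliable update
            double dx = (hyy * bx - hxy * by) / det;
            double dy = (hxx * by - hxy * bx) / det;
            tx += dx;
            ty += dy;
            if (Math.hypot(dx, dy) < 1e-6) break; // converged
        }
        return new double[] {tx, ty};
    }

    /** Smooth synthetic test pattern standing in for a textured planar surface. */
    static double f(double x, double y) {
        return Math.sin(0.2 * x) + Math.cos(0.15 * y) + 0.5 * Math.sin(0.1 * (x + y));
    }

    public static void main(String[] args) {
        int n = 64;
        double sx = 3.0, sy = -2.0; // synthetic ground-truth translation
        double[][] template = new double[n][n], image = new double[n][n];
        for (int y = 0; y < n; y++) {
            for (int x = 0; x < n; x++) {
                template[y][x] = f(x, y);
                image[y][x] = f(x - sx, y - sy);
            }
        }
        double[] t = align(template, image, 50);
        System.out.printf("estimated translation: (%.3f, %.3f)%n", t[0], t[1]);
    }
}
```

Running main should print an estimate close to the synthetic ground truth (3, -2). A tracker for a real projector-camera system would presumably need a richer motion model (for example, a homography for a planar surface) and an appearance model that accounts for the projected light.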

Figure 2. The images used for our demo video: (a) printed on the board; (b) displayed by the projector.

Our demo video, which showcases the capabilities of the RealityAugmentor, first depicts a red virtual ball bouncing off the edges of the surface, alongside the patterns of Figure 2, a chronometer, and a video animation. It then features the user "clicking" on physical hyperlinks, followed by occlusion and changes in the ambient light, and finally interaction with a GUI, all aligned robustly in real time at 30 FPS on commodity hardware.

References

[1] Samuel Audet, Masatoshi Okutomi, and Masayuki Tanaka. Direct Image Alignment of Projector-Camera Systems with Planar Surfaces. The 23rd IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2010). IEEE Computer Society, June 2010.

[2] Oliver Bimber and Ramesh Raskar. Spatial Augmented Reality: Merging Real and Virtual Worlds. A K Peters, Ltd., Wellesley, MA, USA, 2005.

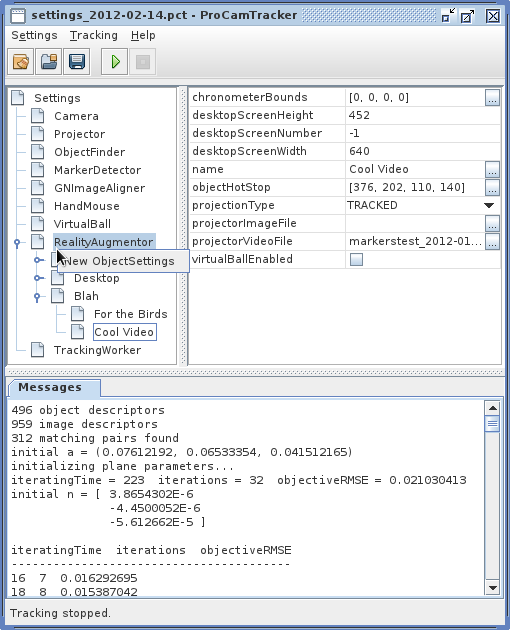

Screenshot

Figure 3 is a screenshot of the user interface of ProCamTracker under Java's Ocean look and feel.

Figure 3. Screenshot of the main window of ProCamTracker, where all the settings can be adjusted.

It was taken after a tracking session, during which the algorithm displayed a few output messages.

Download

Binary

- ProCamTracker: procamtracker-bin-20120329.zip (8.6 MB) for Linux, Mac OS X, and Windows (x86 and x86-64)

- Requires freely downloadable Java SE 6 or 7 (OpenJDK, Sun JDK, IBM JDK, Java SE for Mac OS X, etc.) and OpenCV 2.3.1.

Source code

- JavaCPP: javacpp-src-20120329.zip (84.5 kB)

- JavaCV: javacv-src-20120329.zip (455 kB)

- ProCamTracker: procamtracker-src-20120329.zip (73.0 kB)

- ARToolKitPlus 2.1.1t: ARToolKitPlus_2.1.1t.zip (2.8 MB)

About JavaCPP

More information on the project's home page.

About JavaCV

More information on the project's home page.

About ARToolKitPlus

More information on the original author's Web site.

Author – Samuel Audet <saudet at ok.ctrl.titech.ac.jp>

Last Modified – 2015-01-21