Multi-Modal Pedestrian Detection with Large Misalignment Based on Modal-Wise Regression and Multi-Modal IoU

Contributions

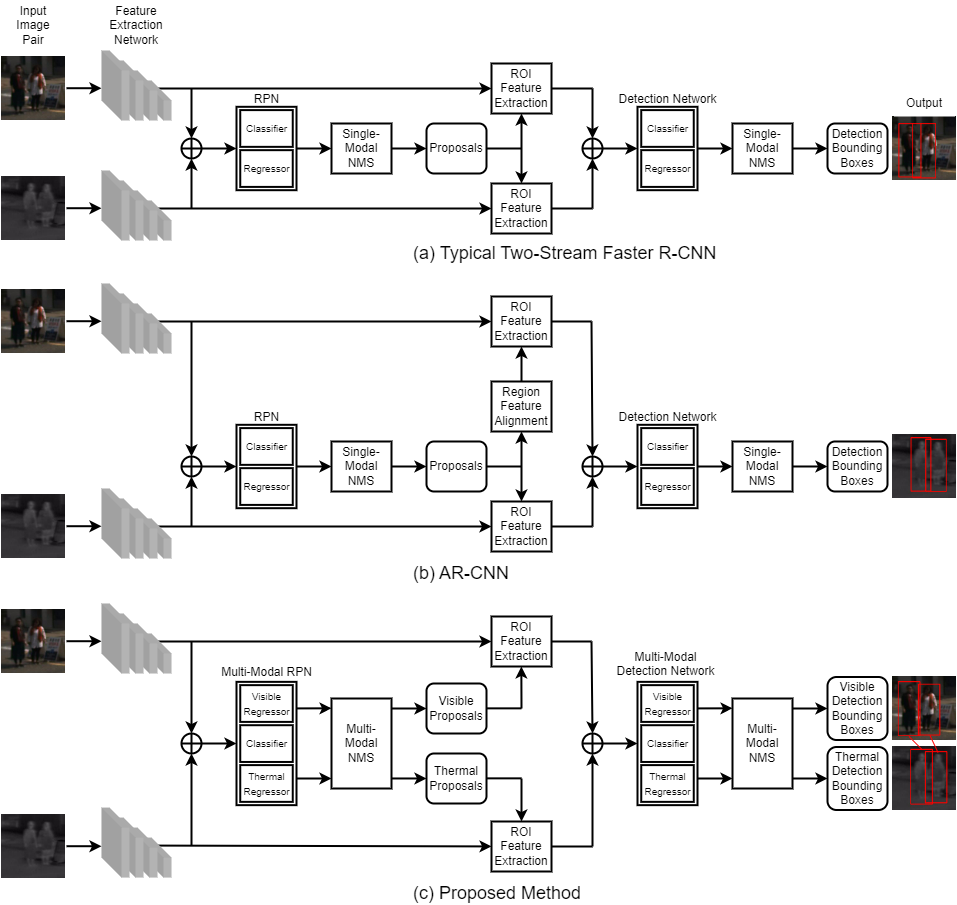

Framework Comparison

Comparison of multi-modal pedestrian detection frameworks based on Faster R-CNN [1]: (a) typical two-stream Faster R-CNN, (b) AR-CNN [4], and (c) the proposed method.

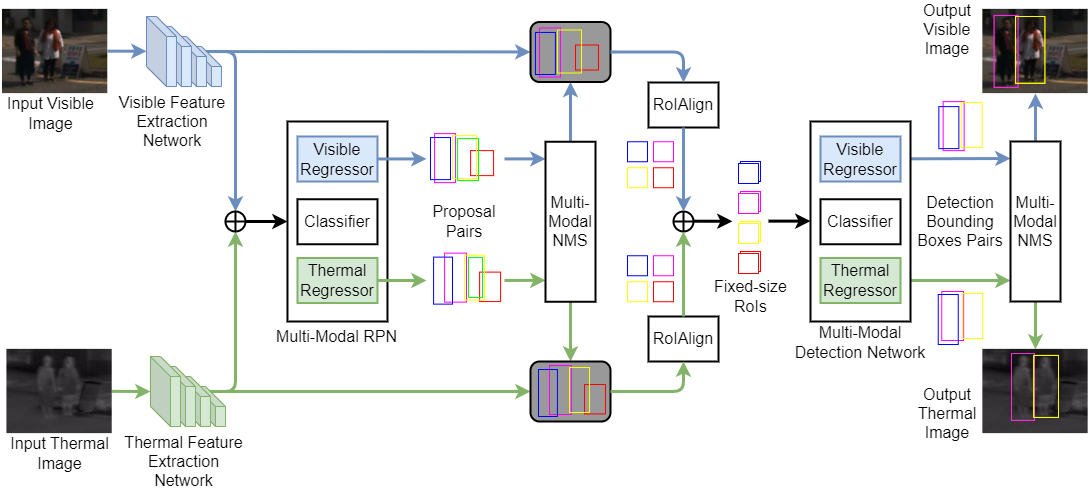

Proposed Network Overview

The overall architecture of our network. We extend Faster R-CNN [1] into a two-stream network that takes visible-thermal image pairs as input and returns pairs of detection bounding boxes, one for each modality, as output. Blue and green blocks and paths denote the visible and thermal modalities, respectively. RoIs and bounding boxes drawn in the same color are paired with each other. ⊕ denotes channel-wise concatenation.
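As a rough illustration of the ⊕ operator only, the sketch below concatenates a visible and a thermal feature map along the channel axis. It is not the authors' implementation: the helper names `concat_channels` and `make_feature`, the channel-first nested-list layout, and the toy sizes are all assumptions made for this example. In a deep-learning framework the same step would normally be a single tensor concatenation along the channel dimension.

```python
# Illustrative sketch only: feature maps use a channel-first layout,
# i.e. a list of C channels, each an H x W grid of floats.
# All names and sizes here are hypothetical, not taken from the paper.

def make_feature(channels, height, width, value):
    """Build a constant C x H x W feature map as nested lists."""
    return [[[value] * width for _ in range(height)] for _ in range(channels)]


def spatial_shape(feat):
    """Return (H, W) of a channel-first feature map."""
    return (len(feat[0]), len(feat[0][0]))


def concat_channels(visible_feat, thermal_feat):
    """Channel-wise concatenation: stack thermal channels after visible ones."""
    if spatial_shape(visible_feat) != spatial_shape(thermal_feat):
        raise ValueError("feature maps must share the same spatial size")
    # In channel-first layout, concatenating along the channel axis
    # is simply concatenating the two lists of channels.
    return visible_feat + thermal_feat


visible = make_feature(channels=2, height=3, width=4, value=1.0)
thermal = make_feature(channels=2, height=3, width=4, value=2.0)

fused = concat_channels(visible, thermal)
fused_shape = (len(fused), len(fused[0]), len(fused[0][0]))
print(fused_shape)
```

The fused map keeps the spatial size of its inputs and has as many channels as the two inputs combined, with the visible channels first and the thermal channels after them.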

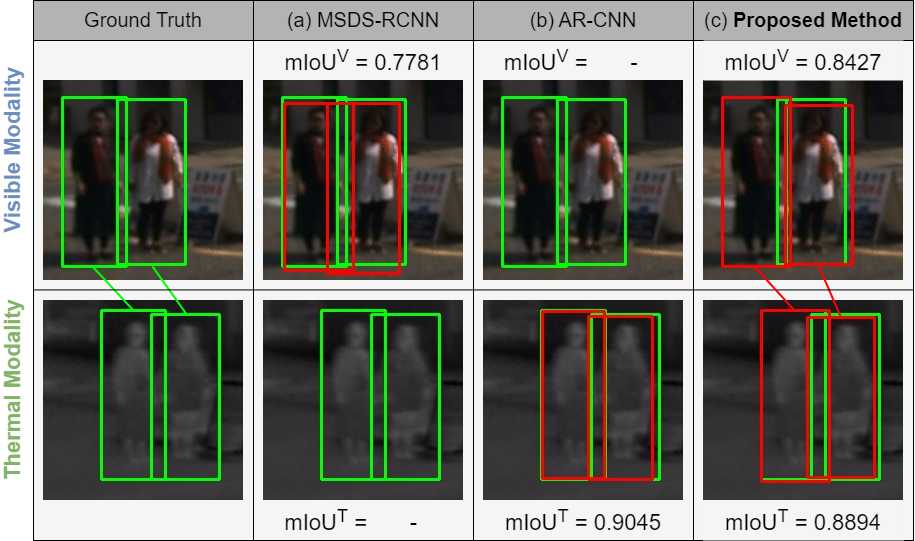

Visualization Examples

Qualitative comparison of detection results on the KAIST dataset [2] for MSDS-RCNN [3], AR-CNN [4], MBNet [5], and ours. Green bounding boxes show the ground-truth annotations by Lu Zhang et al. [4], and red bounding boxes show detection results. Dashed bounding boxes are substituted boxes for methods that do not output paired bounding boxes.

References

Publication

- Multi-Modal Pedestrian Detection with Large Misalignment Based on Modal-Wise Regression and Multi-Modal IoU [arXiv]

- Napat Wanchaitanawong, Masayuki Tanaka, Takashi Shibata, and Masatoshi Okutomi

- Proceedings of the 17th International Conference on Machine Vision Applications (MVA2021), pp.O1-1-4-1-6, July 2021.

- Multi-Modal Pedestrian Detection with Large Misalignment Based on Modal-Wise Regression and Multi-Modal IoU [SPIE]

- Napat Wanchaitanawong, Masayuki Tanaka, Takashi Shibata, and Masatoshi Okutomi

- Journal of Electronic Imaging, Vol.32, Issue 1, pp.013025-1-19, February 2023.