Multi-Modal Pedestrian Detection with Misalignment

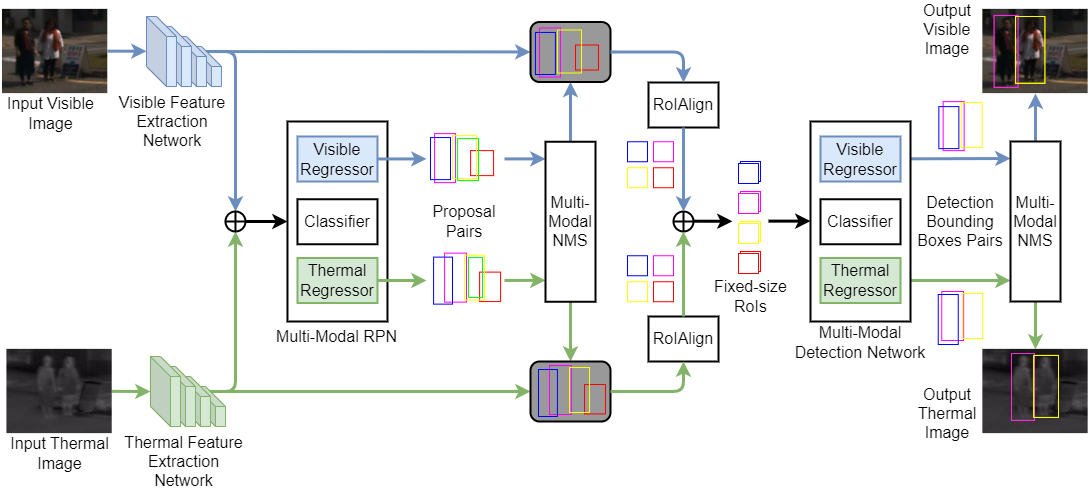

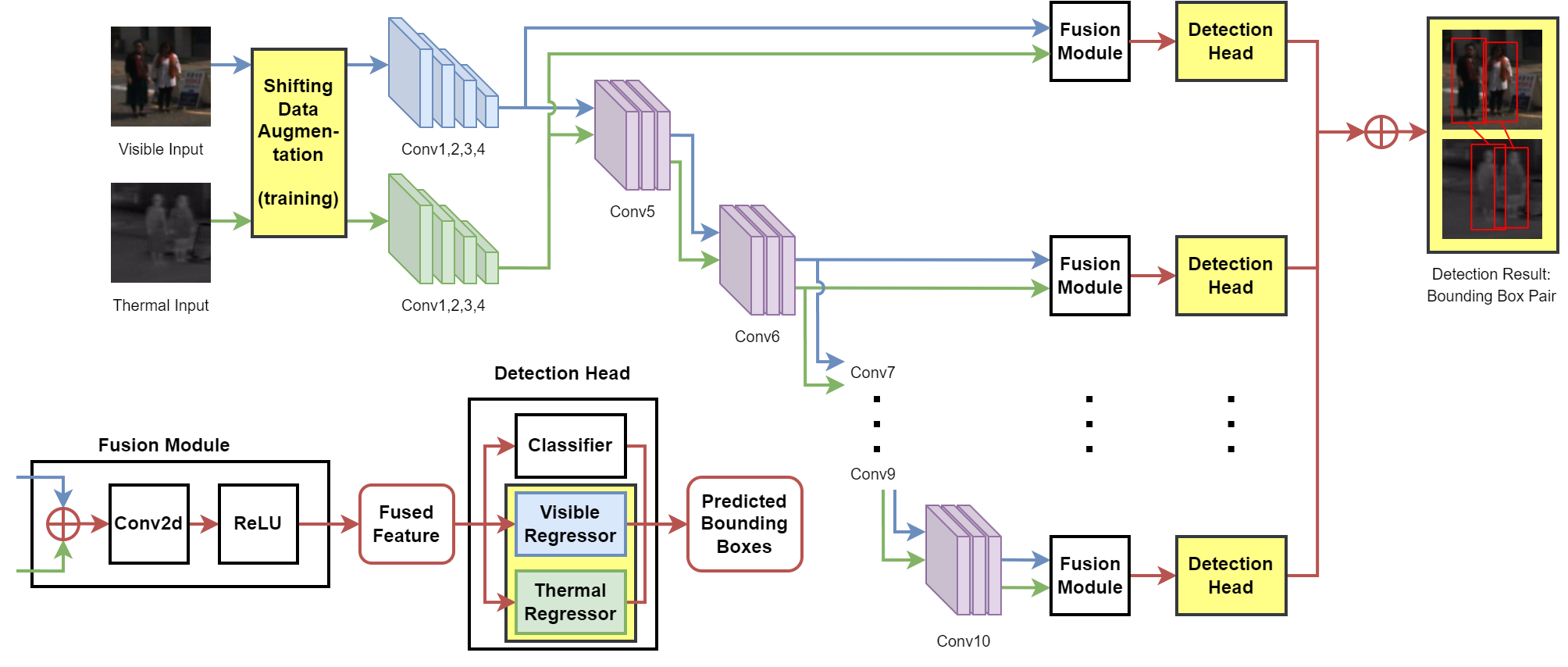

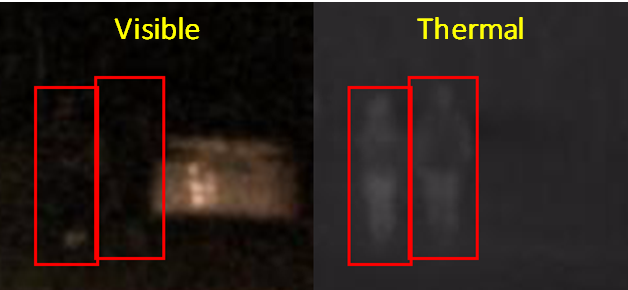

In this research, we study pedestrian detection from RGB (visible) and long-wavelength infrared (thermal) images when the two are misaligned.

Our main concern is how to use the information from both modalities efficiently.