Accurate visual localization is a key challenge for autonomous navigation. A growing number of applications, such as self-driving cars, drones, navigation systems, intelligent robotic systems, Virtual Reality (VR) and Augmented Reality (AR), and Structure from Motion (SfM), require accurate information about the camera's own pose. We aim to predict the 6 degree-of-freedom (6DoF) pose of an input image, typically taken with a low-cost device (e.g., a hand-held camera or a smartphone), with respect to a large-scale database consisting of an image set or a 3D map collected beforehand (e.g., street-view images taken by a car-mounted camera system, or a 3D point cloud captured by a laser rangefinder).

We have collected several new datasets, each consisting of a database that captures a large-scale visual localization setting (e.g., a city-scale street-view image set or a building-scale indoor 3D map) and query photographs taken under conditions different from those of the database (i.e., a different device, time, viewpoint, etc.), which together represent a realistic visual localization problem. We also propose new localization methods that are scalable and robust to these challenging conditions.

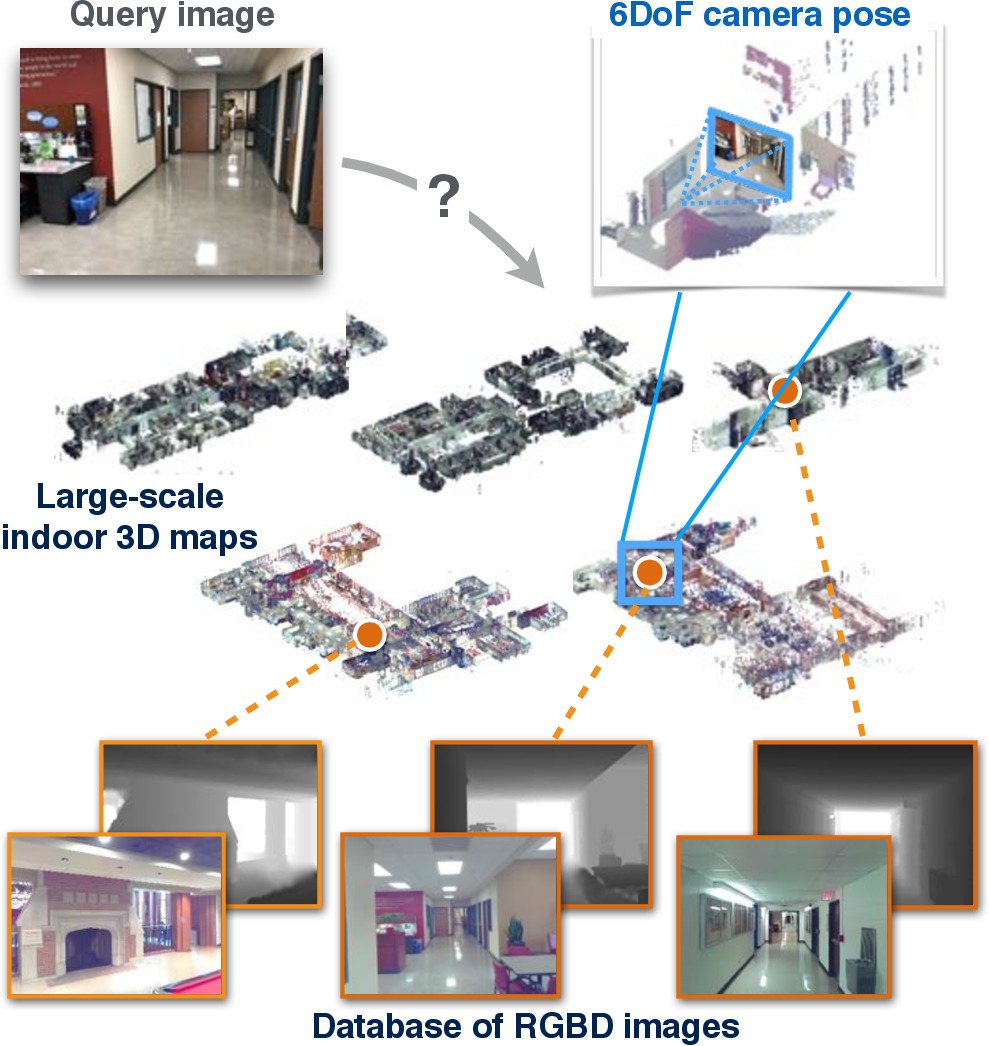

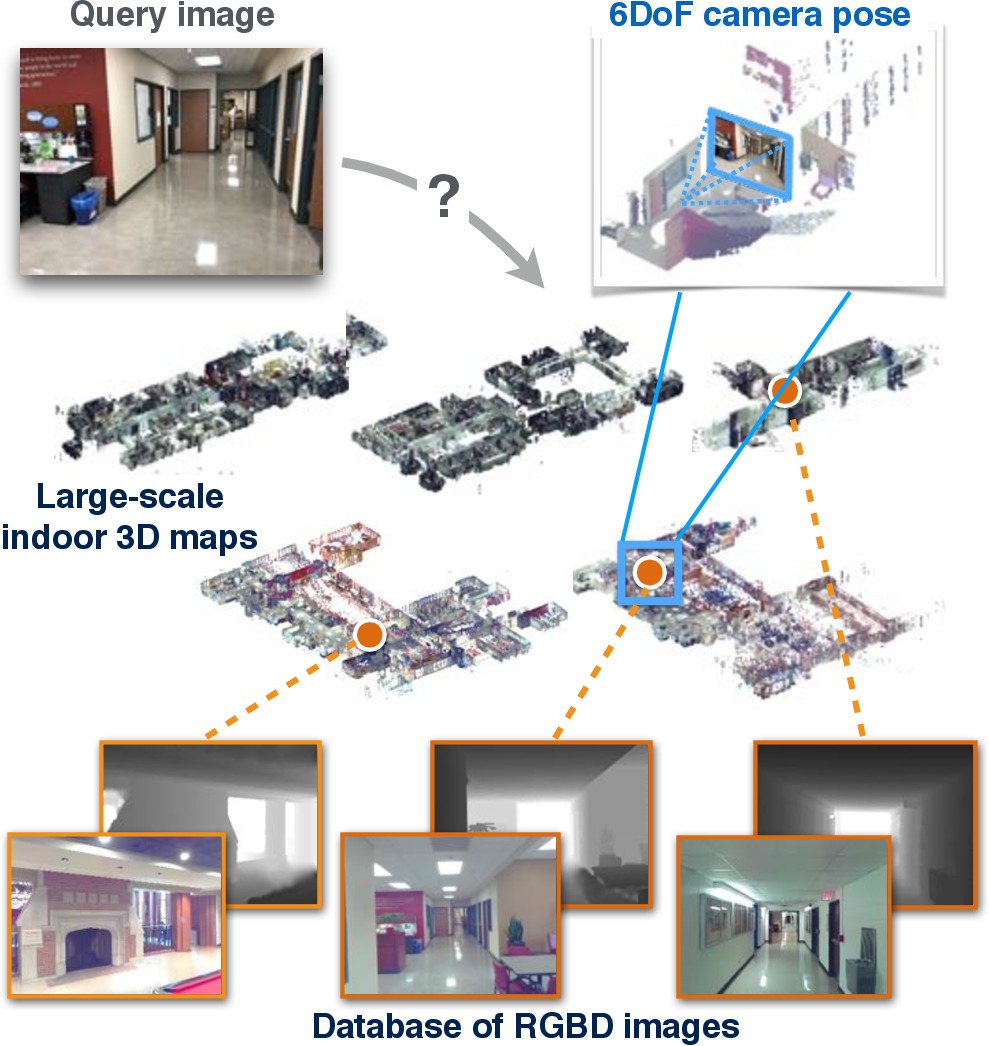

We seek to predict the 6 degree-of-freedom (6DoF) pose of a query photograph with respect to a large indoor 3D map.
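To make "6DoF pose with respect to a 3D map" concrete, here is a minimal sketch assuming the common pinhole-camera convention: the pose is a world-to-camera rotation `R` (3 degrees of freedom) and translation `t` (3 degrees of freedom), and `K` is the camera intrinsic matrix. The helper names (`rotation_z`, `camera_center`, `project`) and all numeric values are hypothetical illustrations, not code or data from the papers below.

```python
import numpy as np

def rotation_z(angle_rad: float) -> np.ndarray:
    """Hypothetical helper: rotation matrix about the z-axis."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def camera_center(R: np.ndarray, t: np.ndarray) -> np.ndarray:
    """Camera position in map coordinates for a world-to-camera pose (R, t)."""
    return -R.T @ t

def project(K: np.ndarray, R: np.ndarray, t: np.ndarray, X: np.ndarray) -> np.ndarray:
    """Project 3D map points X (N x 3) into the image; returns N x 2 pixel coordinates."""
    Xc = (R @ X.T).T + t              # map frame -> camera frame
    uvw = (K @ Xc.T).T                # apply intrinsics (homogeneous pixels)
    return uvw[:, :2] / uvw[:, 2:3]   # perspective division

# Illustrative (made-up) intrinsics and pose.
K = np.array([[1000.0, 0.0, 640.0], [0.0, 1000.0, 360.0], [0.0, 0.0, 1.0]])
R = rotation_z(np.deg2rad(30.0))
t = np.array([0.5, -0.2, 2.0])
C = camera_center(R, t)

# A map point 5 units in front of the camera along its optical axis.
X = C + R.T @ np.array([0.0, 0.0, 5.0])
uv = project(K, R, t, X[None, :])
```

A point on the optical axis projects to the principal point `(640, 360)`; localization is the inverse problem of recovering `R` and `t` from such image-to-map observations.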

(1) We propose a new large-scale visual localization method targeted at indoor environments.

(2) We create a new dataset for large-scale indoor localization that represents a realistic indoor localization scenario.

Hajime Taira, Masatoshi Okutomi, Torsten Sattler, Mircea Cimpoi, Marc Pollefeys, Josef Sivic, Tomas Pajdla, Akihiko Torii. InLoc: Indoor Visual Localization with Dense Matching and View Synthesis. In: CVPR, 2018. [Project page incl. dataset]

Hajime Taira, Ignacio Rocco, Jiri Sedlar, Masatoshi Okutomi, Josef Sivic, Tomas Pajdla, Torsten Sattler, Akihiko Torii. Is This The Right Place? Geometric-Semantic Pose Verification for Indoor Visual Localization. In: ICCV, 2019. [Project page incl. dataset]

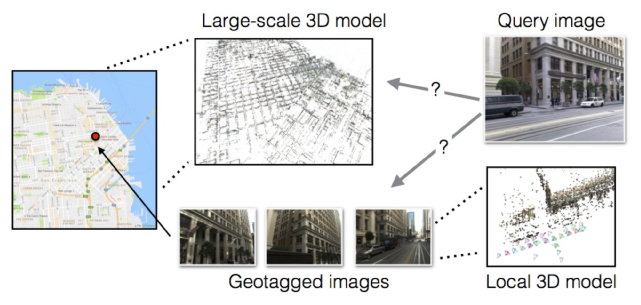

Historically, approaches to visual localization in large-scale outdoor environments fall into two categories: 2D image-based localization and 3D structure-based localization. The two streams have developed independently, which raises the question: which approach should we follow for accurate visual localization?
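As a rough illustration of the 2D image-based stream, the sketch below performs image retrieval: each image is assumed to be summarized by a global descriptor vector, and the query is matched to the most similar database images by cosine similarity, with the best match's known position serving as a coarse location estimate. The descriptors, positions, and function names here are hypothetical stand-ins (random vectors rather than a real descriptor such as those used in the papers); the 3D structure-based stream, which instead matches 2D image features to 3D map points and solves for the full pose, is not shown.

```python
import numpy as np

def l2_normalize(v: np.ndarray, axis: int = -1) -> np.ndarray:
    """Scale vectors to unit length so dot products equal cosine similarity."""
    return v / np.linalg.norm(v, axis=axis, keepdims=True)

def retrieve(query_desc: np.ndarray, db_descs: np.ndarray, k: int = 3):
    """Return indices and scores of the k database images most similar to the query."""
    q = l2_normalize(query_desc)
    D = l2_normalize(db_descs, axis=1)
    sims = D @ q                      # cosine similarity to every database image
    order = np.argsort(-sims)[:k]     # highest similarity first
    return order, sims[order]

# Hypothetical database: 100 images with 128-D descriptors and known 3D positions.
rng = np.random.default_rng(0)
db_descs = rng.normal(size=(100, 128))
db_positions = rng.uniform(0.0, 50.0, size=(100, 3))

# A query that looks almost exactly like database image 42.
query_desc = db_descs[42] + 0.01 * rng.normal(size=128)
idx, scores = retrieve(query_desc, db_descs, k=3)
coarse_position = db_positions[idx[0]]  # position only; orientation is not recovered
```

Retrieval alone yields only the pose of a nearby database image, which is why image-based pipelines are typically refined with additional geometric reasoning.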

(1) We compare state-of-the-art localization methods from both streams on our new dataset, which makes it possible to measure 6DoF pose estimation accuracy.

(2) We demonstrate experimentally that large-scale 3D models are not strictly necessary for accurate visual localization. We show that combining image-based methods with local reconstructions yields pose accuracy similar to that of state-of-the-art structure-based methods.
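Measuring 6DoF pose accuracy usually comes down to two numbers per query: the distance between estimated and ground-truth camera positions, and the angle of the relative rotation between estimated and ground-truth orientations. The sketch below uses these common definitions and reports the fraction of queries within both a position and a rotation threshold; the exact protocol and thresholds used in the papers may differ, and the values in the example are hypothetical.

```python
import numpy as np

def rotation_z(angle_rad: float) -> np.ndarray:
    """Hypothetical helper: rotation matrix about the z-axis."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def pose_errors(R_est, C_est, R_gt, C_gt):
    """Position error (units of C) and rotation error (degrees) for one query."""
    pos_err = float(np.linalg.norm(C_est - C_gt))
    # Angle of the relative rotation R_est^T R_gt, from its trace.
    cos_angle = (np.trace(R_est.T @ R_gt) - 1.0) / 2.0
    rot_err = float(np.degrees(np.arccos(np.clip(cos_angle, -1.0, 1.0))))
    return pos_err, rot_err

def fraction_localized(errors, max_pos, max_rot_deg):
    """Fraction of queries whose position AND rotation errors are within thresholds."""
    ok = [p <= max_pos and r <= max_rot_deg for p, r in errors]
    return sum(ok) / len(ok) if ok else 0.0

# Made-up example: estimate is 0.5 m off and rotated 5 degrees about z.
p, r = pose_errors(rotation_z(np.deg2rad(5.0)), np.array([0.3, 0.4, 0.0]),
                   np.eye(3), np.zeros(3))
rate = fraction_localized([(p, r), (2.0, 1.0)], max_pos=1.0, max_rot_deg=10.0)
```

Reporting the fraction at several position thresholds, rather than a single mean error, keeps a few gross failures from dominating the comparison between methods.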

Torsten Sattler, Akihiko Torii, Josef Sivic, Marc Pollefeys, Hajime Taira, Masatoshi Okutomi, Tomas Pajdla. Are Large-Scale 3D Models Really Necessary for Accurate Visual Localization? In: CVPR, 2017. [Project page incl. dataset]

Copyright (C) Okutomi-Tanaka Lab. All Rights Reserved.