CNN-based Image Recognition for Degraded Images

The objective of this project is to construct a recognition network for degraded images.

- Ensemble Approach

- Feature Adjustor

- Layer-Wise Feature Adjustor

- Multi-Scale and Top-K Confidence Map Aggregation

- Diffusion-Based Adaptation for Classification of Unknown Degraded Images

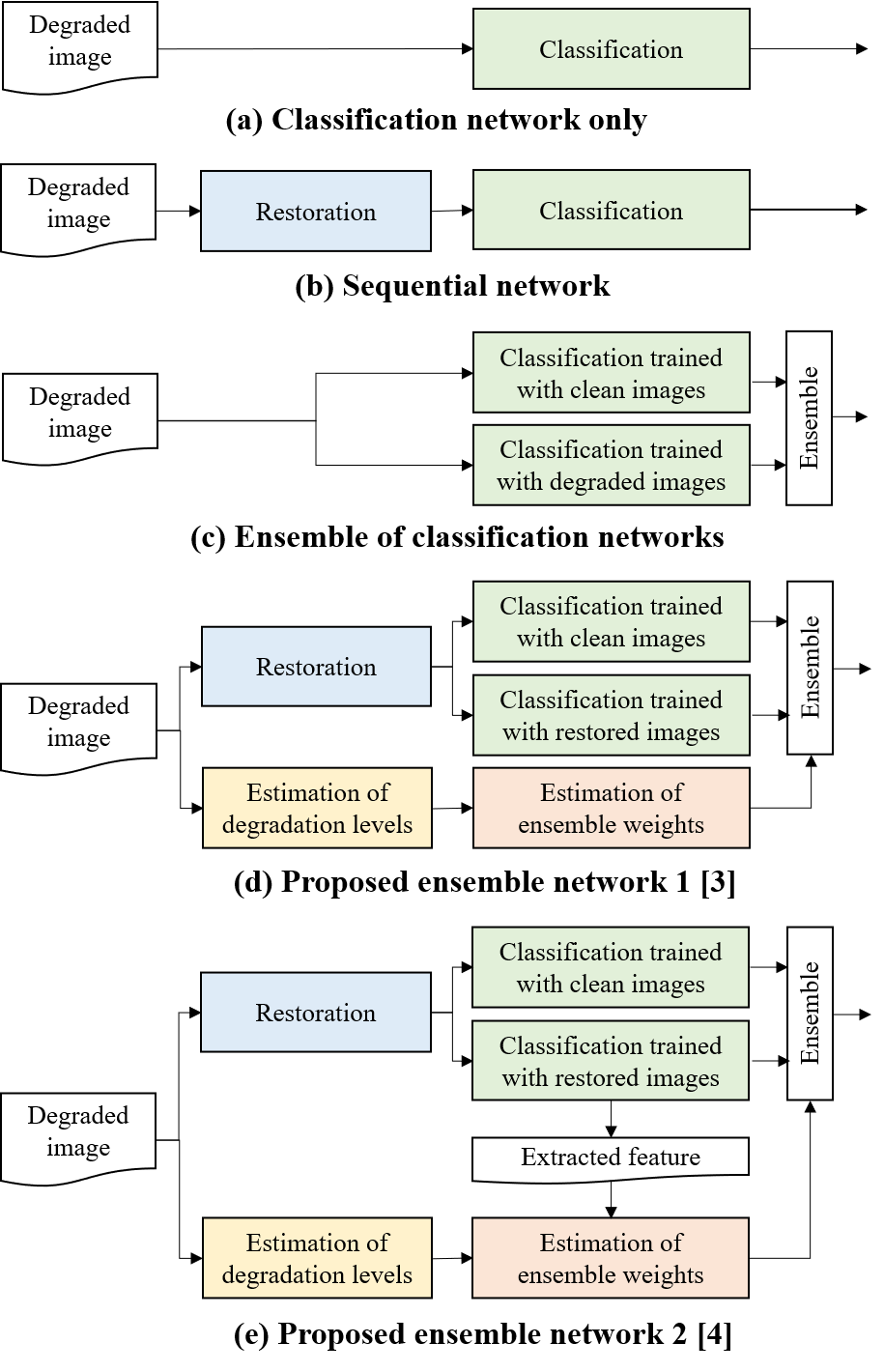

1. Ensemble Approach [3], [4]

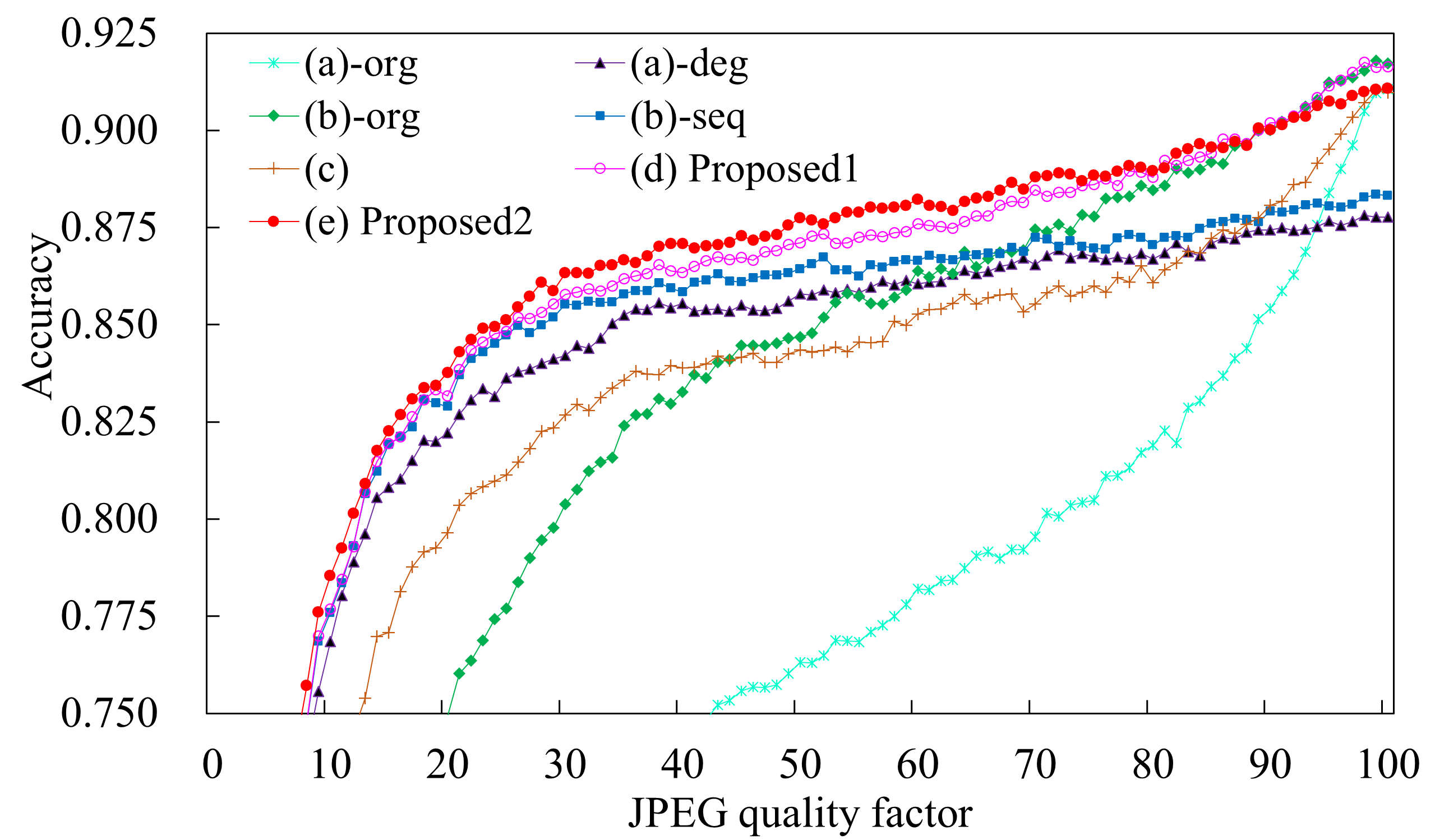

We propose a convolutional neural network that classifies degraded images by combining a restoration network with ensemble learning [3], [4]. The ensemble weights are estimated automatically from the estimated degradation level. As a result, the proposed network classifies degraded images well over a wide range of degradation levels.
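As a rough illustration of degradation-dependent ensemble weighting, the sketch below combines the class probabilities of several ensemble members using weights computed from an estimated degradation level. It assumes, hypothetically, that each member is associated with one JPEG quality factor and that the weights are a softmax over the negative squared distance between the estimated quality and each member's quality. `ensemble_weights`, `ensemble_predict`, and `temperature` are illustrative names, and [3], [4] may compute the weights differently. The restoration network is omitted here: the member probabilities are taken as given.

```python
import numpy as np


def ensemble_weights(est_level, member_levels, temperature=10.0):
    """Hypothetical weighting: members whose associated degradation level is
    close to the estimated level receive larger weights (softmax over the
    negative squared distance, scaled by `temperature`)."""
    member_levels = np.asarray(member_levels, dtype=float)
    logits = -((est_level - member_levels) ** 2) / temperature
    logits -= logits.max()  # subtract the maximum for numerical stability
    w = np.exp(logits)
    return w / w.sum()


def ensemble_predict(member_probs, est_level, member_levels, temperature=10.0):
    """Weighted average of member class probabilities.

    member_probs: array of shape (K, num_classes), one row per ensemble member.
    Returns an array of shape (num_classes,).
    """
    w = ensemble_weights(est_level, member_levels, temperature)
    return w @ np.asarray(member_probs, dtype=float)
```

For example, with members associated with quality factors 90, 50, and 10 and an estimated quality of 55, almost all of the weight goes to the quality-50 member; a larger `temperature` spreads the weight more evenly across members.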

Fig. 1 Classification networks for degraded images.

Fig. 2 Accuracy on JPEG-compressed CIFAR-10 with a VGG-like network.

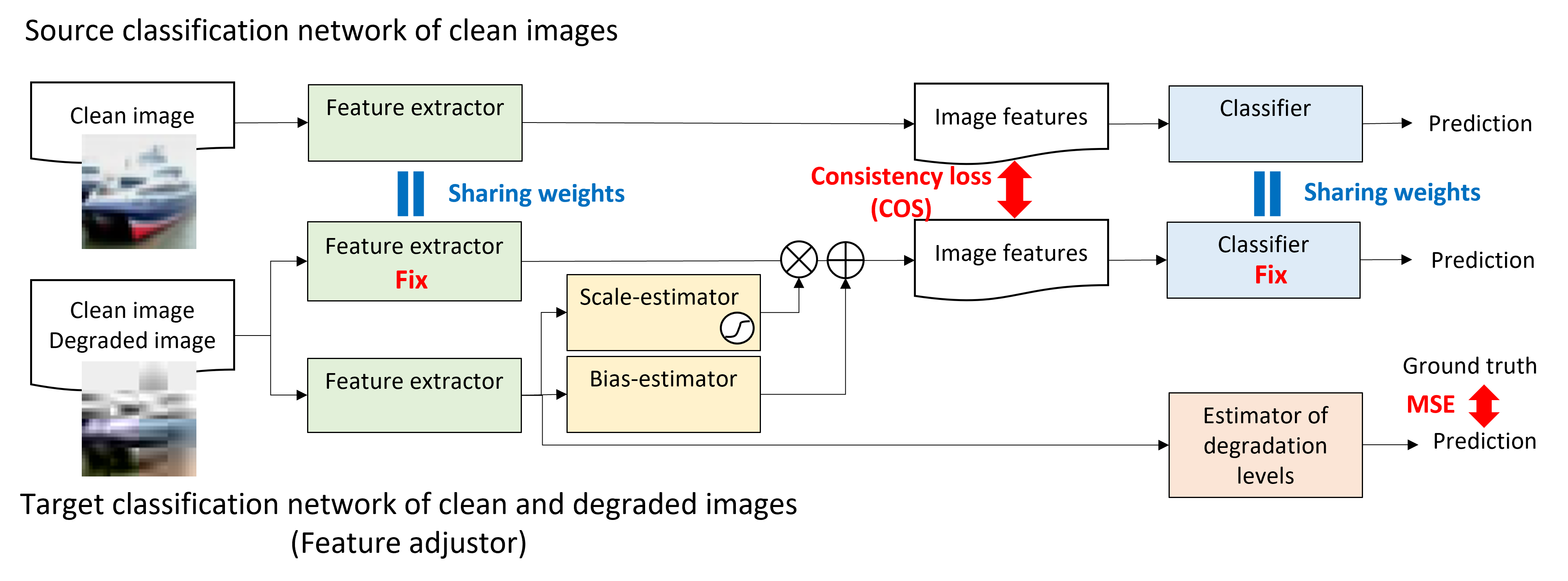

2. Feature Adjustor [6]

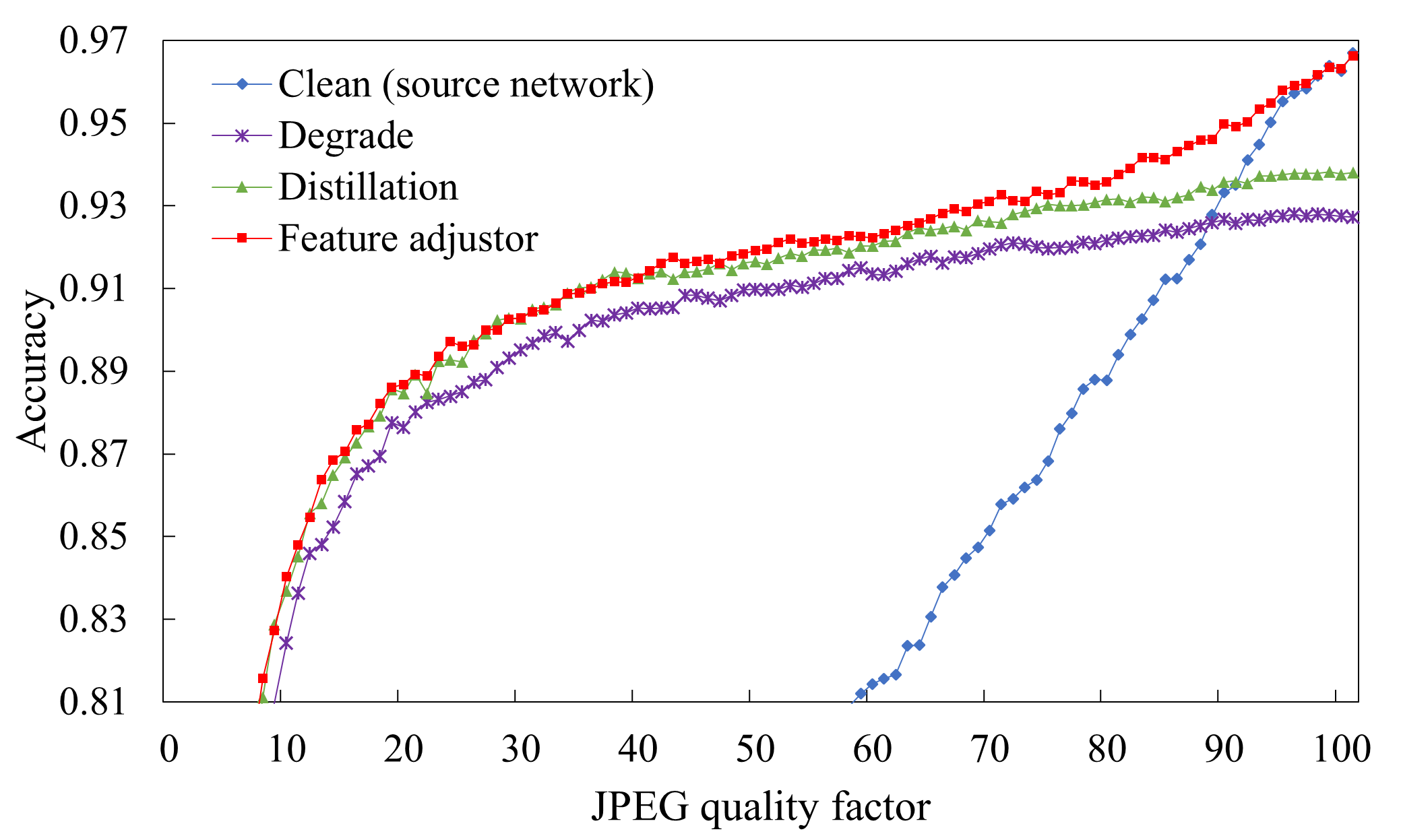

On clean images, the ensemble approach may underperform a classification network trained with clean images only. To overcome this shortcoming, we propose in [6] a network that learns, in a multi-task manner, both the classification of degraded images and the estimation of their degradation levels, as shown in Fig. 3. The proposed network is based on consistency regularization between the image features of clean images and those of degraded images. It can classify degraded images without sacrificing performance on clean images.
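The multi-task objective described above can be sketched as a sum of three terms: cross-entropy on the degraded image, a consistency term between degraded-image and clean-image features, and a regression loss on the degradation level. This is a minimal NumPy sketch under stated assumptions: the squared-error distances, the weights `alpha` and `beta`, and the function names are hypothetical and not taken from [6], and we assume the clean-image features act as fixed targets.

```python
import numpy as np


def softmax_cross_entropy(logits, labels):
    """Mean cross-entropy for integer labels; logits has shape (N, C)."""
    z = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = z - np.log(np.exp(z).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels].mean()


def feature_adjustor_loss(logits_deg, labels, feat_deg, feat_clean,
                          level_pred, level_true, alpha=1.0, beta=0.1):
    """Hypothetical multi-task objective (alpha and beta are assumptions)."""
    # Task 1: classify the degraded image.
    cls_loss = softmax_cross_entropy(logits_deg, labels)
    # Consistency regularization: pull the degraded-image features toward the
    # features of the clean counterpart (assumed to be a fixed target).
    cons_loss = np.mean((feat_deg - feat_clean) ** 2)
    # Task 2: estimate the degradation level (regression).
    level_loss = np.mean((level_pred - level_true) ** 2)
    return cls_loss + alpha * cons_loss + beta * level_loss
```

When the degraded-image features match the clean ones and the level estimate is exact, the objective reduces to the classification loss alone.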

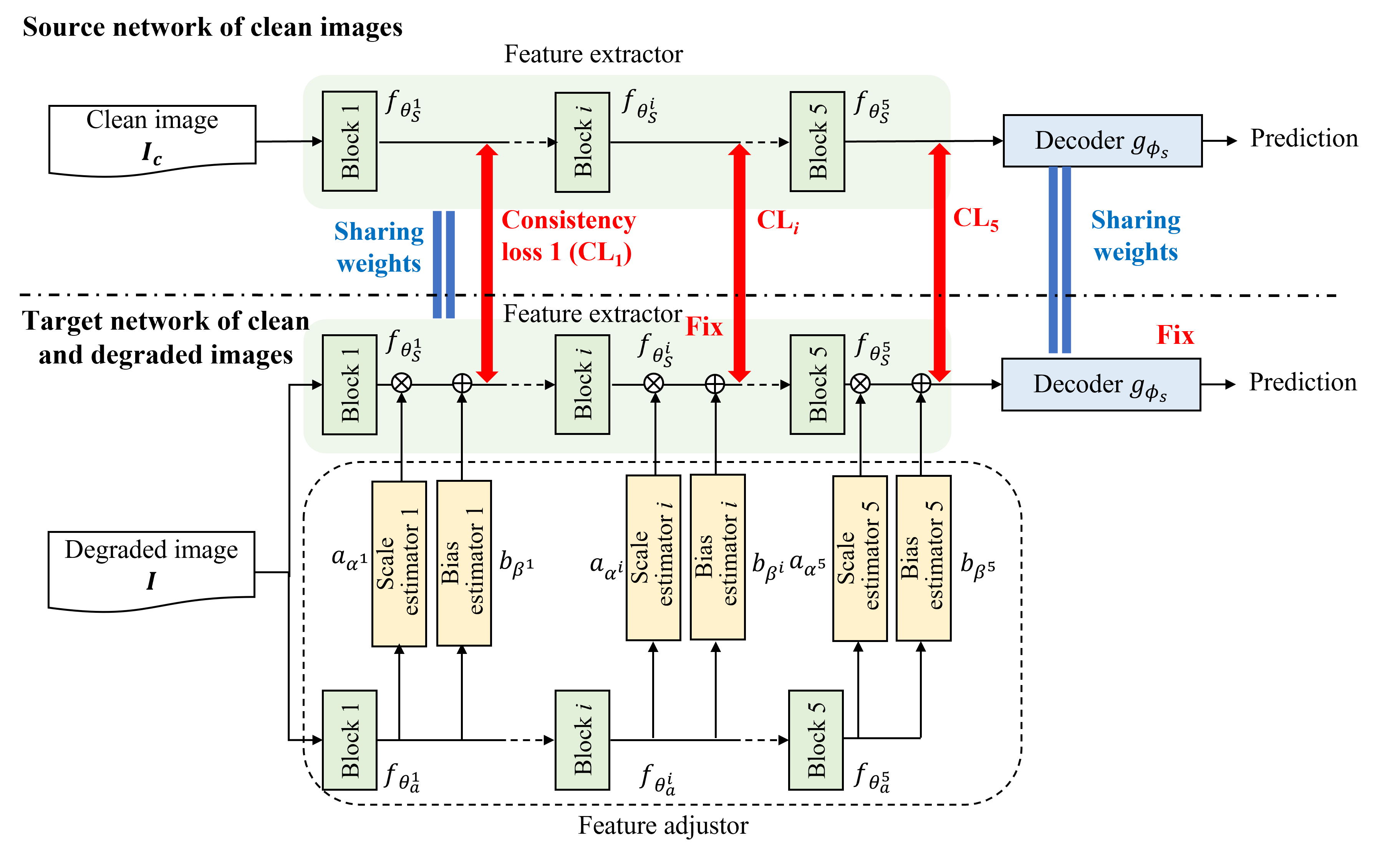

Fig. 3 Feature adjustor.

Fig. 4 Accuracy on JPEG-compressed CIFAR-10 with ShakePyramidNet.

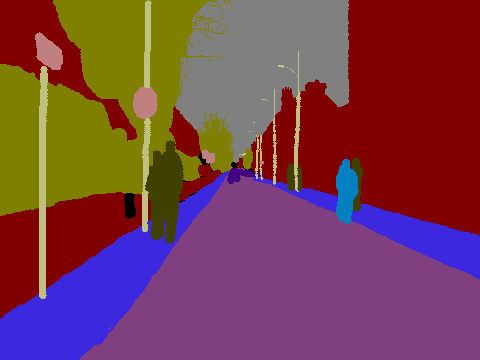

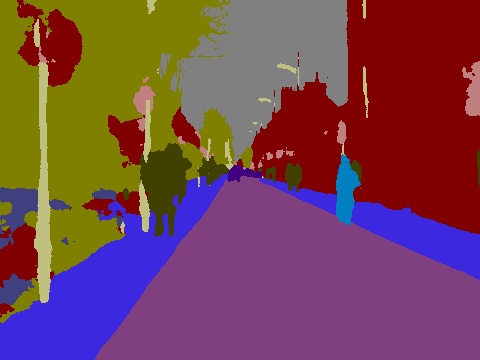

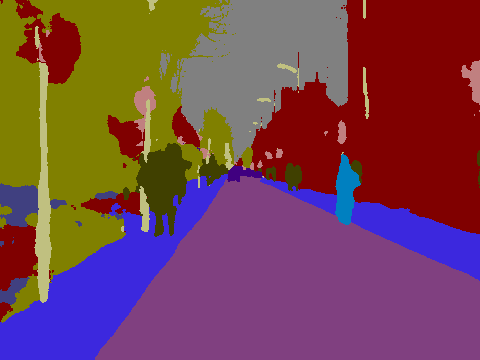

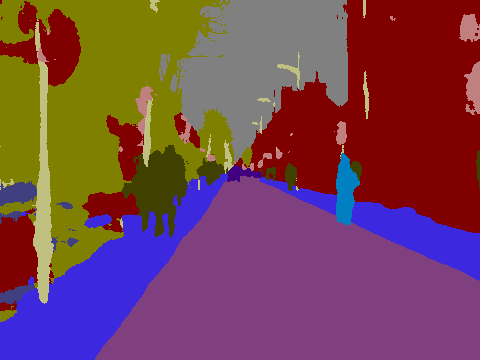

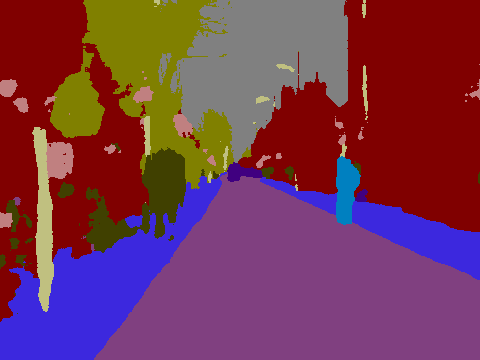

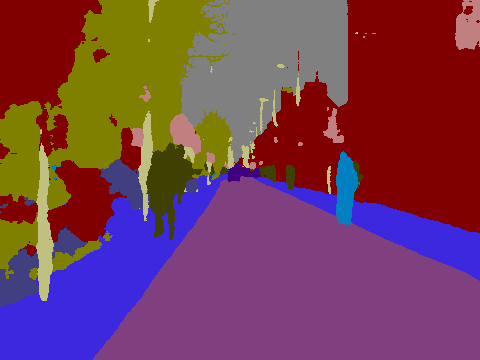

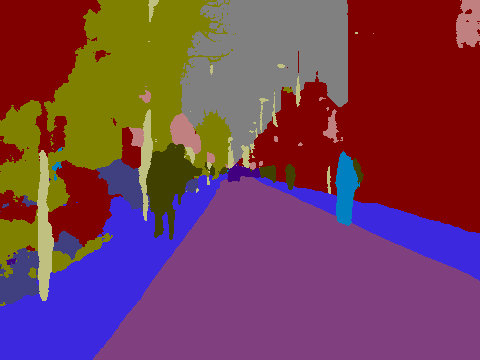

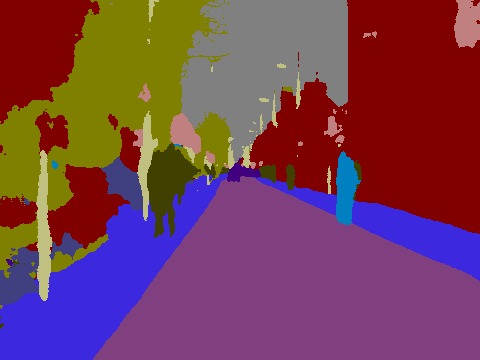

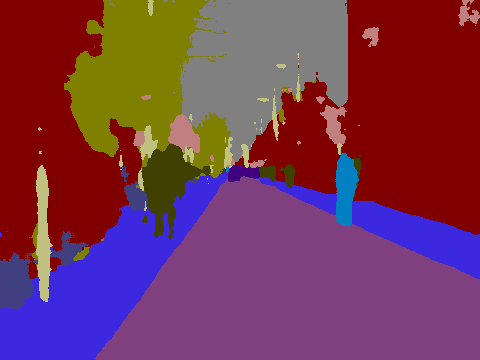

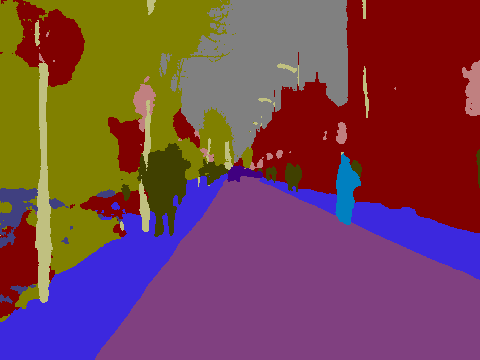

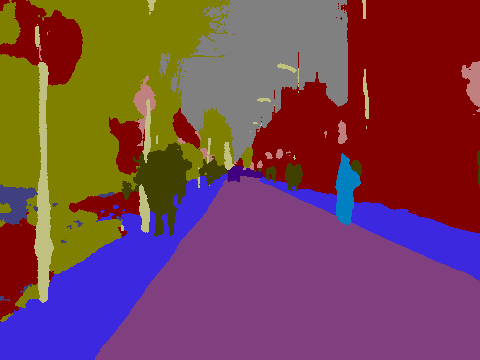

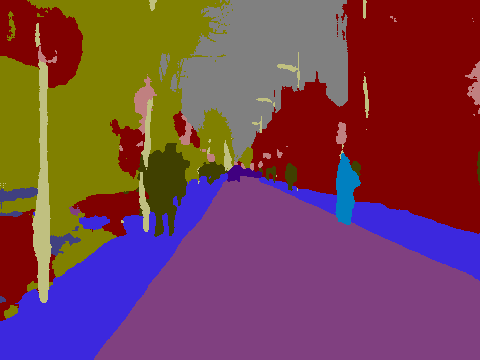

3. Layer-Wise Feature Adjustor [7]

The previous feature adjustor, which we call the single-layer feature adjustor, focuses on the final image features of the feature extractor. We extend the feature adjustor to multiple layers of image features and call this extension the "layer-wise feature adjustor." Figure 5 shows the structure of the layer-wise feature adjustor built on SegNet, a semantic segmentation network. Unlike the single-layer feature adjustor, the layer-wise feature adjustor does not infer degradation levels.
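Extending the consistency term from one layer to several layers can be sketched as a weighted sum of per-layer feature distances. The sketch below assumes a mean squared error per layer and uniform default weights; which SegNet layers are adjusted and how they are weighted are not specified here, so `layerwise_consistency_loss` and `layer_weights` are hypothetical. In line with the text, there is no degradation-level term.

```python
import numpy as np


def layerwise_consistency_loss(feats_deg, feats_clean, layer_weights=None):
    """Weighted sum of per-layer mean squared differences between the features
    of a degraded image and those of its clean counterpart.

    feats_deg, feats_clean: lists of arrays, one per adjusted layer. Shapes may
    differ between layers but must match between the two lists.
    """
    if len(feats_deg) != len(feats_clean):
        raise ValueError("both feature lists need the same number of layers")
    if layer_weights is None:
        layer_weights = [1.0] * len(feats_deg)  # assumption: uniform weights
    if len(layer_weights) != len(feats_deg):
        raise ValueError("need exactly one weight per layer")
    total = 0.0
    for weight, f_deg, f_clean in zip(layer_weights, feats_deg, feats_clean):
        if f_deg.shape != f_clean.shape:
            raise ValueError("feature shapes differ at one of the layers")
        total += weight * float(np.mean((f_deg - f_clean) ** 2))
    return total
```

With a single layer, this reduces to the single-layer consistency term; adding layers constrains intermediate features as well as the final ones.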

Fig. 5 Layer-wise feature adjustor.

| JPEG quality factor | Clean | Degrade | Single-layer | Layer-wise |
|---|---|---|---|---|
| Clean images | 0.575 | 0.543 | 0.575 | 0.575 |
| 90 | 0.572 | 0.543 | 0.572 | 0.574 |
| 70 | 0.567 | 0.541 | 0.569 | 0.573 |
| 50 | 0.563 | 0.539 | 0.565 | 0.572 |
| 30 | 0.545 | 0.534 | 0.552 | 0.566 |
| 10 | 0.460 | 0.505 | 0.506 | 0.536 |
| Average | 0.547 | 0.534 | 0.557 | 0.566 |

[Fig. 6 image grid. Rows: Input, Ground truth, Clean, Degrade, Layer-wise. Columns (JPEG quality factor): Clean Image, Quality 90, Quality 50, Quality 10.]

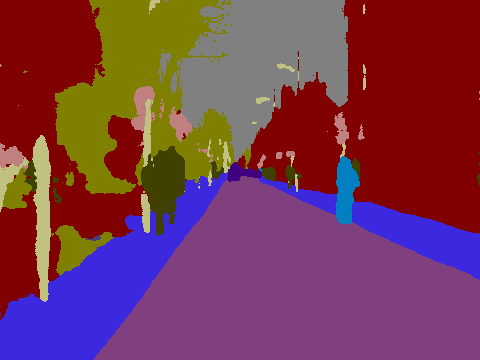

Fig. 6 Sample images of the segmentation results for CamVid.

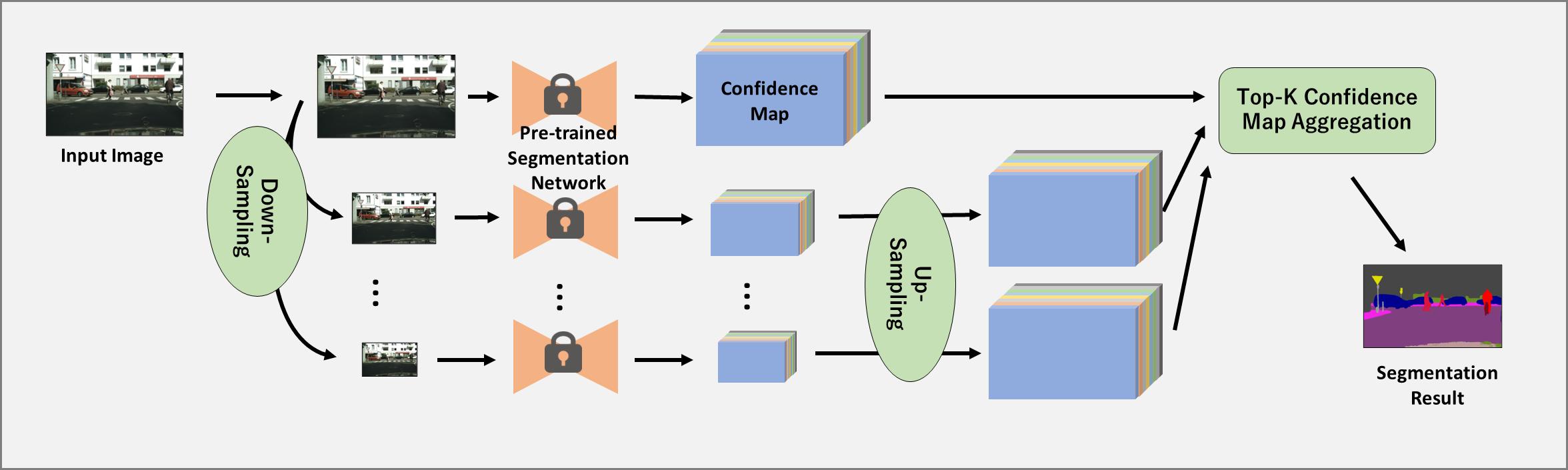

4. Multi-Scale and Top-K Confidence Map Aggregation [8]

This research presents a simple framework that makes semantic segmentation algorithms robust against image degradation without retraining the original semantic segmentation CNN. Our key observation is that a down-sampled image is less sensitive to various types of degradation than the original-sized image. We therefore adopt multi-scale test-time augmentation: the CNN is applied to the input at several scales, and the inferred confidence maps are aggregated to obtain the final inference result. To further improve performance, we also propose top-K confidence map aggregation, which averages the top-K confidence values.
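The multi-scale inference and top-K aggregation can be sketched in NumPy as follows. The sketch assumes a channel-first image, a hypothetical `segment` callable standing in for the pretrained segmentation CNN (it returns a per-class confidence map of shape (C, h, w)), nearest-neighbour resizing in place of the interpolation a real pipeline would likely use, and an illustrative scale set and K. Here, for every pixel and class, the K largest confidence values across scales are averaged before the per-pixel argmax; [8] may differ in these details.

```python
import numpy as np


def resize_nearest(x, out_h, out_w):
    """Nearest-neighbour resize of the last two axes of x."""
    h, w = x.shape[-2:]
    rows = np.arange(out_h) * h // out_h
    cols = np.arange(out_w) * w // out_w
    return x[..., rows[:, None], cols[None, :]]


def topk_aggregate(conf_maps, k):
    """Average the k largest confidence values along the scale axis.

    conf_maps: array of shape (S, C, H, W), one confidence map per scale.
    """
    num_scales = conf_maps.shape[0]
    if not 1 <= k <= num_scales:
        raise ValueError("k must be between 1 and the number of scales")
    # np.sort is ascending, so the last k entries are the k largest.
    return np.sort(conf_maps, axis=0)[-k:].mean(axis=0)


def multiscale_topk_segment(image, segment, scales=(0.5, 0.75, 1.0), k=2):
    """Multi-scale test-time augmentation with top-K confidence aggregation.

    image: array of shape (channels, H, W).
    segment: hypothetical callable mapping an image to confidences (C, h, w).
    Returns the label map (H, W) and the fused confidences (C, H, W).
    """
    h, w = image.shape[-2:]
    maps = []
    for s in scales:
        # Down-sample the input and run the unchanged segmentation model ...
        small = resize_nearest(image, max(1, round(h * s)), max(1, round(w * s)))
        conf = segment(small)
        # ... then bring its confidence map back to the original resolution.
        maps.append(resize_nearest(conf, h, w))
    fused = topk_aggregate(np.stack(maps), k)
    return fused.argmax(axis=0), fused
```

Setting `k` equal to the number of scales reduces to plain averaging of the confidence maps, and `k=1` reduces to a per-class maximum over scales; intermediate values of K lie between the two.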

Fig. 7 Multi-Scale Test-Time Augmentation Architecture.

Publications

[1] CNN-based Classification of Degraded Images

Kazuki Endo, Masayuki Tanaka, Masatoshi Okutomi,

Image Processing: Algorithms and Systems Conference, at IS&T Electronic Imaging 2020.

[PDF (IngentaConnect)]

[Reproduction Code]

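[2] Classification of Degraded Images Using Convolutional Neural Networks (in Japanese)

Kazuki Endo, Masayuki Tanaka, Masatoshi Okutomi,

The 26th Symposium on Sensing via Image Information (SSII2020), June 2020.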

[Poster(PDF)]

[Reproduction Code]

[3] Classifying degraded images over various levels of degradation

Kazuki Endo, Masayuki Tanaka, Masatoshi Okutomi,

Proceedings of the IEEE International Conference on Image Processing (ICIP2020), October 2020.

[PDF (arXiv)]

[Reproduction Code]

[4] CNN-Based Classification of Degraded Images with Awareness of Degradation Levels

Kazuki Endo, Masayuki Tanaka, Masatoshi Okutomi,

IEEE Transactions on Circuits and Systems for Video Technology, Early Access, 2020.

[Abstract in IEEE Xplore]

[PrePrint PDF]

[Reproduction Code]

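[5] A Classification Network for Degraded Images That Handles Diverse Degradation Levels (in Japanese)

Kazuki Endo, Masayuki Tanaka, Masatoshi Okutomi,

The 27th Symposium on Sensing via Image Information (SSII2021), June 2021.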

[Reproduction Code]

[6] CNN-Based Classification of Degraded Images Without Sacrificing Clean Images

Kazuki Endo, Masayuki Tanaka, Masatoshi Okutomi,

IEEE Access, Early Access, 2021.

[Abstract & PDF in IEEE Xplore]

[Reproduction Code]

[Model Files (pth files, 17GB)]

[7] Semantic Segmentation of Degraded Images Using Layer-Wise Feature Adjustor

Kazuki Endo, Masayuki Tanaka, Masatoshi Okutomi,

Proceedings of the Winter Conference on Applications of Computer Vision (WACV2023), January 2023 (to appear).

[PDF (forthcoming)]

[Reproduction Code]

[8] Top-K Confidence Map Aggregation for Robust Semantic Segmentation Against Unexpected Degradation

Yu Moriyasu, Takashi Shibata, Masayuki Tanaka, Masatoshi Okutomi,

IEEE International Conference on Consumer Electronics, 2023.

[Abstract in IEEE Xplore]

[PDF]

[Reproduction Code]