Single Image Deraining Network with Rain Embedding Consistency and Layered LSTM

Yizhou Li, Yusuke Monno, Masatoshi Okutomi

IEEE Winter Conference on Applications of Computer Vision 2022 (WACV 2022)

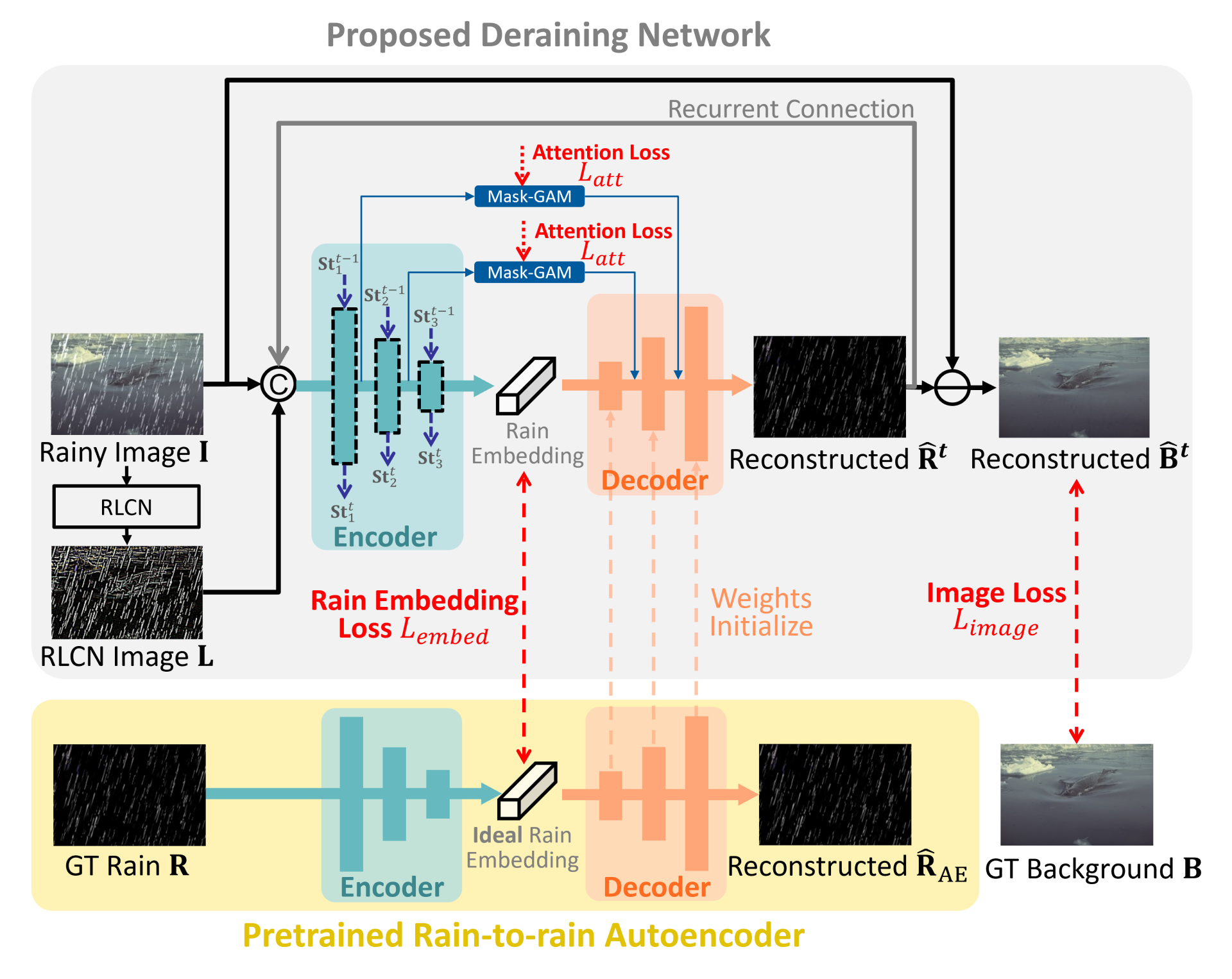

Method Overview

An overview of the proposed single image deraining network.

Abstract

Single image deraining is typically addressed as residual learning: the network predicts the rain layer from an input rainy image, and that layer is then removed from the input. For this purpose, encoder-decoder networks have drawn wide attention. In such a network, the encoder must produce a high-quality rain embedding, because this embedding determines how well the subsequent decoding stage can reconstruct the rain layer. However, most existing studies overlook the importance of rain embedding quality, which limits their performance and leads to over- or under-deraining. In this paper, motivated by our observation that a rain-to-rain autoencoder reconstructs the rain layer with high accuracy, we introduce the idea of "Rain Embedding Consistency". We regard the embedding encoded by the autoencoder as the ideal rain embedding, and aim to enhance deraining performance by improving the consistency between this ideal embedding and the rain embedding produced by the encoder of the deraining network. To achieve this, we apply a Rain Embedding Loss that directly supervises the encoding process, using Rectified Local Contrast Normalization (RLCN) as a guide that effectively extracts candidate rain pixels. We also propose a Layered LSTM for recurrent deraining and fine-grained encoder feature refinement at different scales. Qualitative and quantitative experiments demonstrate that the proposed method outperforms previous state-of-the-art methods, particularly on a real-world dataset.
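The abstract combines three ingredients: residual deraining (input minus predicted rain layer), an RLCN map that highlights candidate rain pixels, and a loss that pulls the deraining encoder's embedding toward the autoencoder's "ideal" embedding. The NumPy sketch below illustrates these ideas on a toy image. It is not the authors' implementation: the RLCN formula used here (local mean and standard deviation over a 7x7 window, followed by a ReLU), the window size, the `eps` value, and the plain L1 form of the embedding loss are all assumptions, and `box_mean`, `rlcn`, `derain` and `rain_embedding_loss` are hypothetical helper names. No networks are involved; the "predicted" rain layer is simply the ground-truth streak.

```python
import numpy as np


def box_mean(x: np.ndarray, k: int) -> np.ndarray:
    """Mean over each k x k window (k odd), using reflect padding and an integral image."""
    pad = k // 2
    xp = np.pad(x, pad, mode="reflect")
    # Integral image with a leading row/column of zeros.
    s = np.pad(np.cumsum(np.cumsum(xp, axis=0), axis=1), ((1, 0), (1, 0)))
    h, w = x.shape
    total = s[k:k + h, k:k + w] - s[:h, k:k + w] - s[k:k + h, :w] + s[:h, :w]
    return total / (k * k)


def rlcn(gray: np.ndarray, k: int = 7, eps: float = 1e-3) -> np.ndarray:
    """Hypothetical RLCN: local contrast normalization followed by a ReLU.

    Rain streaks are usually brighter than their surroundings, so keeping only
    positive normalized responses marks candidate rain pixels (assumed form).
    """
    mu = box_mean(gray, k)
    var = np.maximum(box_mean(gray ** 2, k) - mu ** 2, 0.0)
    lcn = (gray - mu) / (np.sqrt(var) + eps)
    return np.maximum(lcn, 0.0)


def derain(rainy: np.ndarray, rain_layer: np.ndarray) -> np.ndarray:
    """Residual formulation: background = rainy input minus predicted rain layer."""
    return np.clip(rainy - rain_layer, 0.0, 1.0)


def rain_embedding_loss(e_derain: np.ndarray, e_ideal: np.ndarray) -> float:
    """Hypothetical consistency loss: mean L1 distance between the deraining
    encoder's embedding and the 'ideal' embedding of a rain-to-rain autoencoder."""
    return float(np.mean(np.abs(e_derain - e_ideal)))


# Toy usage: a flat background with one bright vertical streak.
background = np.full((32, 32), 0.4)
rain = np.zeros((32, 32))
rain[:, 10] = 0.5
rainy = np.clip(background + rain, 0.0, 1.0)

mask = rlcn(rainy)
print(mask[0, 10] > 1.0, mask[0, 9] == 0.0)  # streak flagged, its neighbor suppressed
print(np.allclose(derain(rainy, rain), background))
```

The rectification step is what lets the map act as a guide: darker-than-local pixels next to a streak get negative normalized values and are zeroed, so only the bright streak survives. In the paper the RLCN guide and the embedding loss are used together during training; how exactly the guide enters the loss is not reproduced here.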

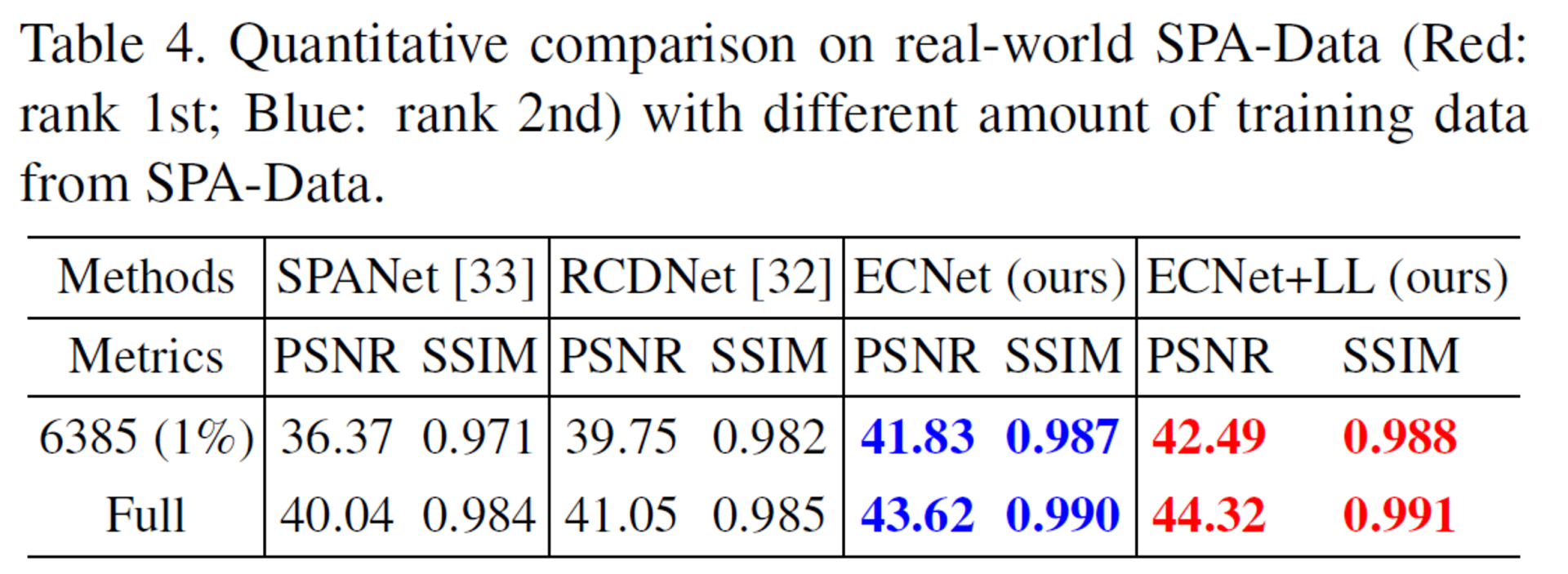

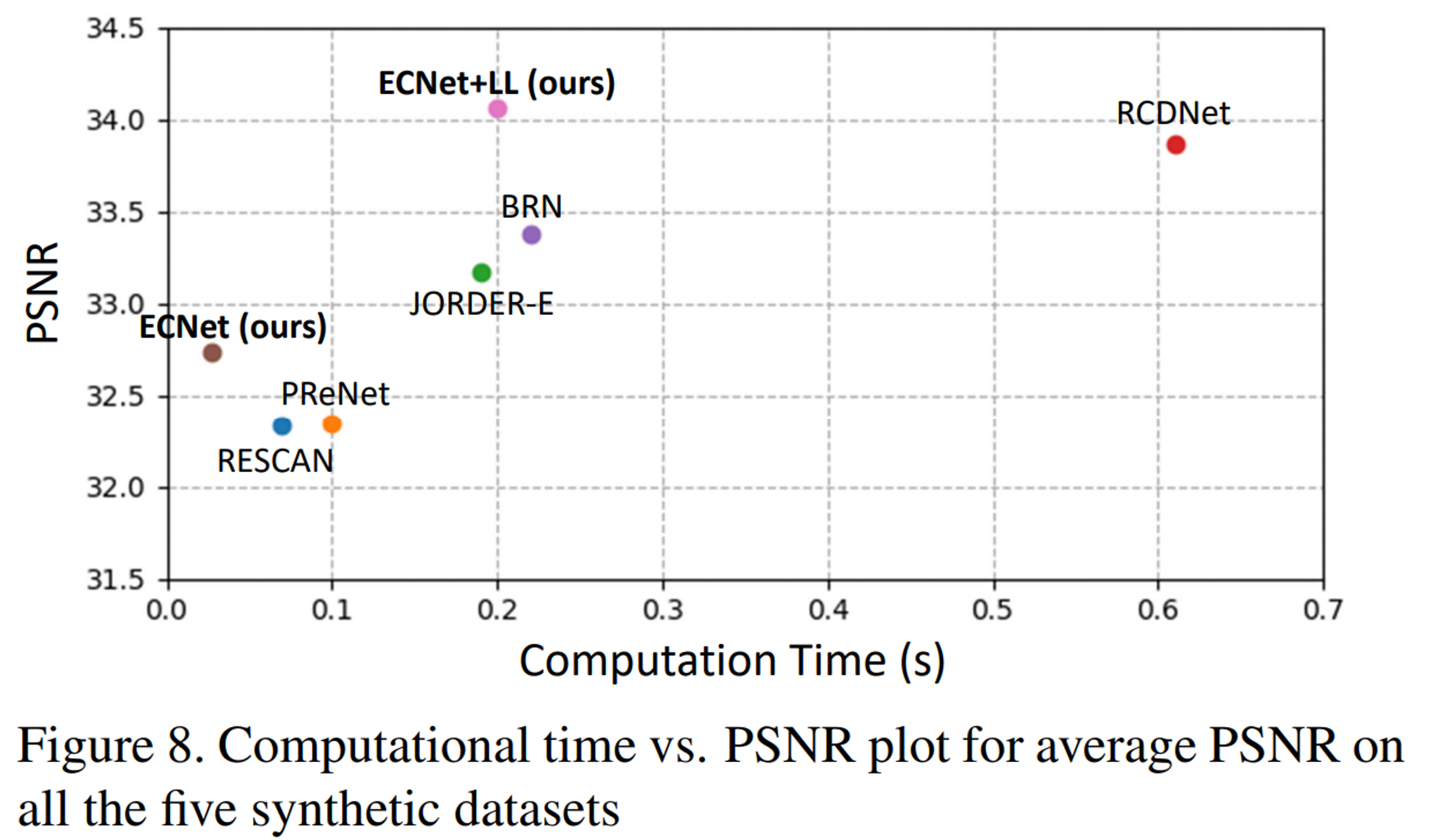

Quantitative Results

Below are quantitative evaluations on a real-world dataset [1], together with a comparison of computational efficiency against existing state-of-the-art methods.

The quantitative results on a real-world dataset (SPA-Data [1]).

The two proposed methods achieve good performance with high computational efficiency.

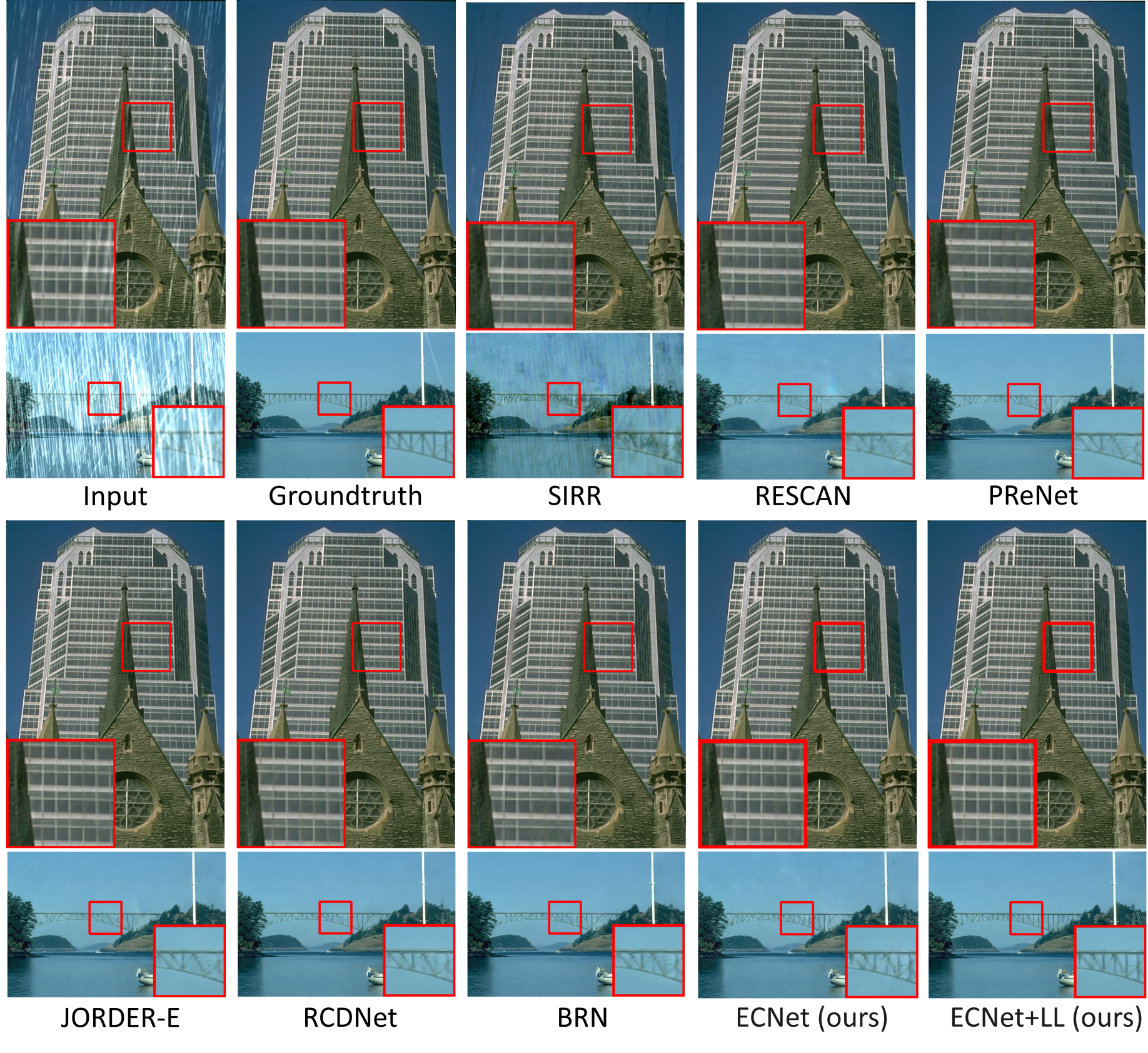

Qualitative Results

Below are single image deraining examples on a real-world dataset [1] and two synthetic datasets [2], compared with existing state-of-the-art methods. Please refer to the supplementary material for more detailed results.

The results on a real-world dataset (SPA-Data [1]).

The results on two synthetic datasets (Rain100L and Rain100H [2]).

Publications

Single Image Deraining Network with Rain Embedding Consistency and Layered LSTM

Yizhou Li, Yusuke Monno, Masatoshi Okutomi

IEEE Winter Conference on Applications of Computer Vision (WACV 2022, accepted)

[arxiv], [supp], [code]

Related Publications

Recurrent RLCN-Guided Attention Network for Single Image Deraining

Yizhou Li, Yusuke Monno, Masatoshi Okutomi

International Conference on Machine Vision Applications (MVA 2021)

[link]

References

[1] Tianyu Wang, Xin Yang, Ke Xu, Shaozhe Chen, Qiang Zhang, and Rynson WH Lau. Spatial attentive single-image deraining with a high quality real rain dataset. In Proc. of IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), pages 12270–12279, 2019. [Github]

[2] Wenhan Yang, Robby T Tan, Jiashi Feng, Zongming Guo, Shuicheng Yan, and Jiaying Liu. Joint rain detection and removal from a single image with contextualized deep networks. IEEE Trans. on Pattern Analysis and Machine Intelligence, 42(6):1377–1393, 2019. [Github]

Contact

Yizhou Li: yli[at]ok.sc.e.titech.ac.jp

Yusuke Monno: ymonno[at]ok.sc.e.titech.ac.jp

Masatoshi Okutomi: mxo[at]ctrl.titech.ac.jp
